In this 2025 web hosting comparison, I show how bare metal hosting compares with virtualized hosting in terms of performance, security, scaling and costs. Based on typical workloads, I explain when exclusive hardware is worthwhile and when VMs win on agility.

Key points

The following key points will give you a quick overview for a direct comparison.

- Performance: Direct hardware access delivers top values; virtualization adds a small overhead.

- Scaling: Bare metal grows hardware-bound; VM setups scale within minutes.

- Security: Physical separation for bare metal; strict segmentation required for multi-tenancy.

- Costs: Fixed rates for exclusive hardware; usage-based billing for VMs.

- Control: Full control over bare metal; high level of automation in VM operation.

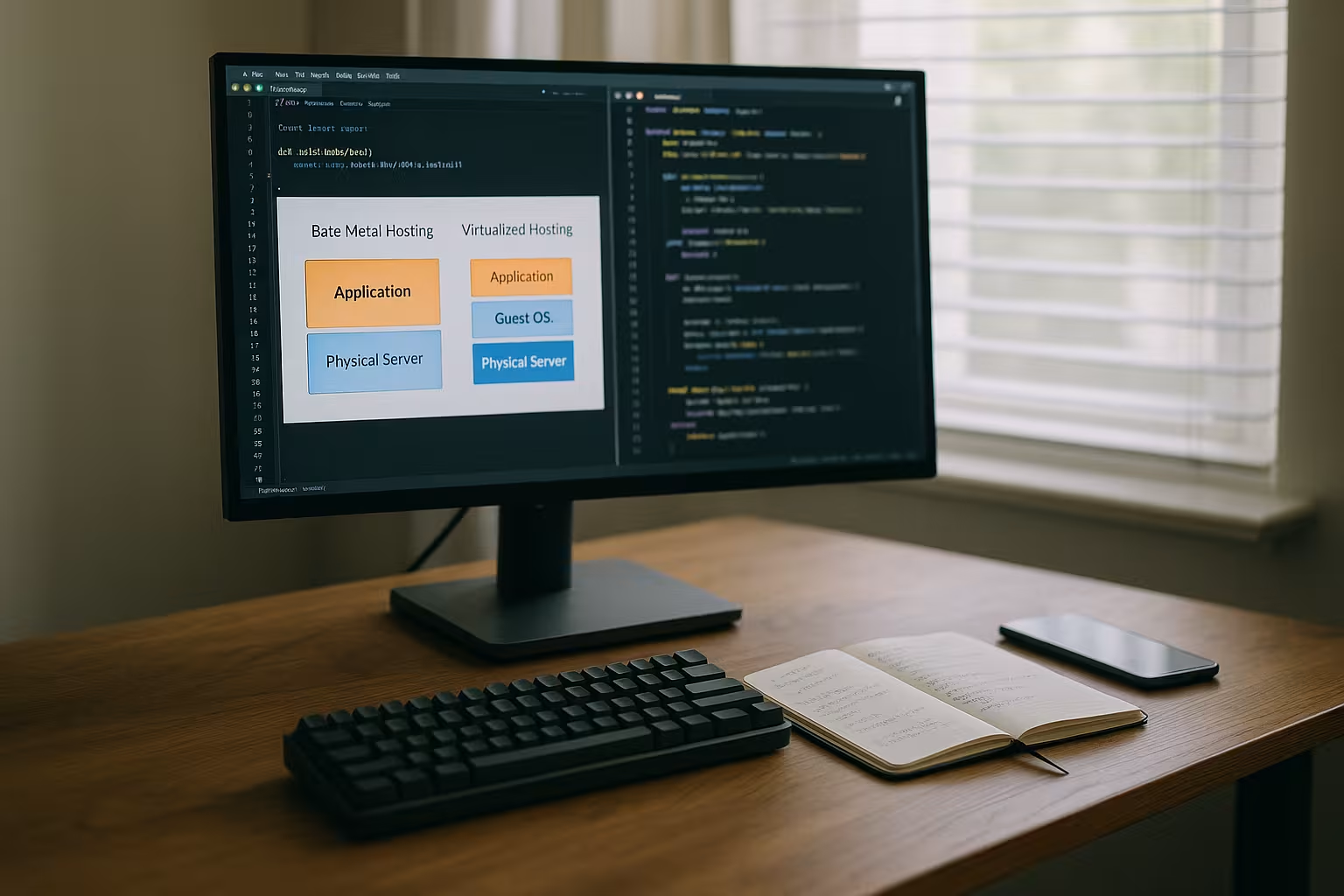

What does bare metal hosting really mean?

Bare metal describes a physically dedicated server that I use exclusively. No hypervisor layer shares resources, which means that CPU, RAM and storage are completely mine. This exclusive use prevents the well-known noisy neighbor effect and ensures reproducible latencies. I choose the operating system, set my own security controls and can activate special hardware features. This provides maximum control, but requires specialist knowledge for patching, monitoring and recovery. Those who require compliant data storage and constant performance benefit in particular from single tenancy, but pay higher fixed costs and accept longer delivery times.

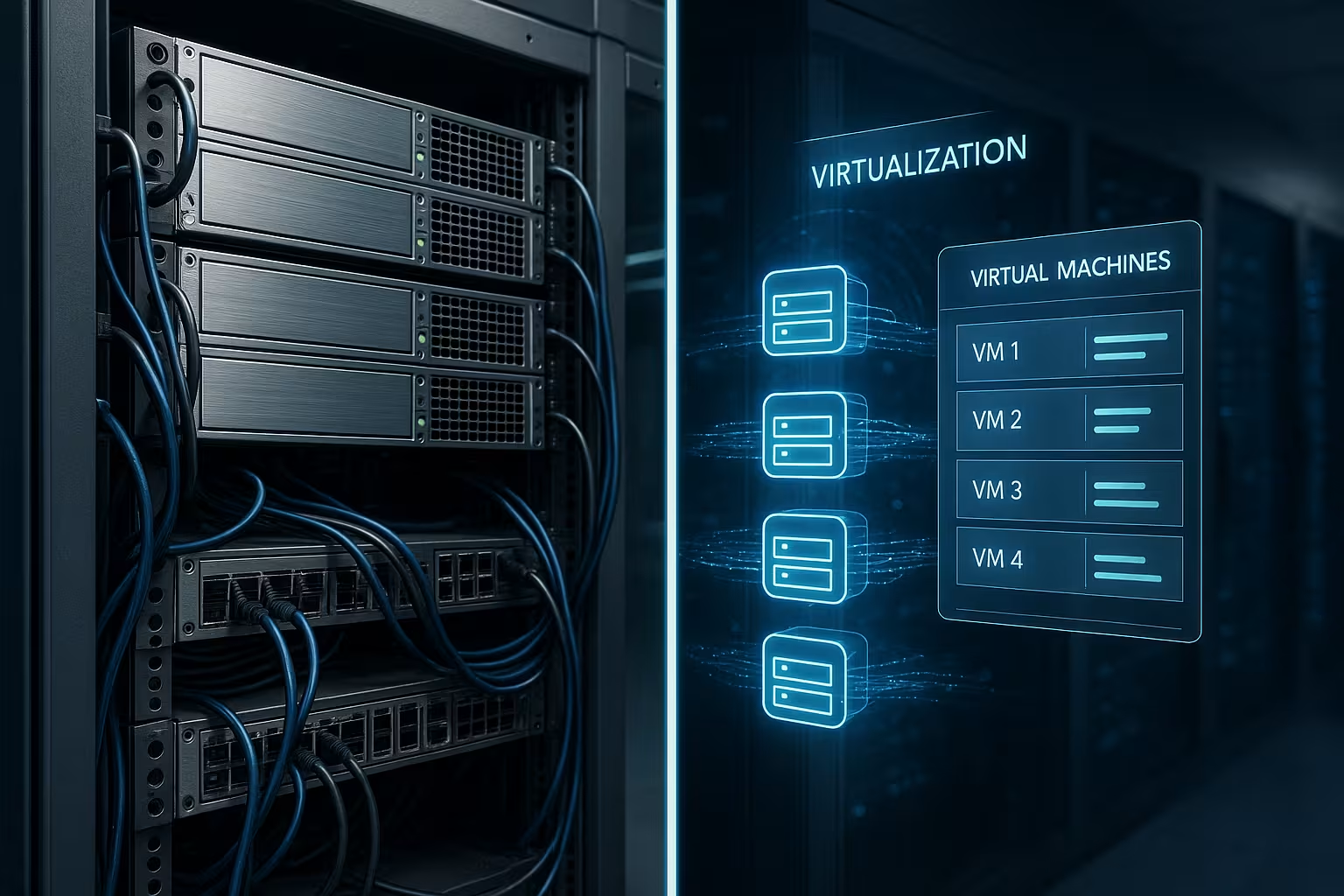

Virtualized hosting in practice

With VM hosting, a hypervisor divides the hardware into isolated instances, each with its own OS and defined resources. I start new machines in minutes, move workloads flexibly and benefit from snapshots, images and automation. This approach lowers entry costs and is mostly billed pay-as-you-go. At the same time, there is a small virtualization overhead and I have less influence on the underlying hardware. If you want to delve deeper, take a look at the basics of modern server virtualization to understand the differences between type 1 and type 2 hypervisors. For dynamic applications, high agility counts, while multi-tenancy requires clean segmentation.

Performance, latency and noisy neighbor

Performance remains the strongest argument in favor of bare metal hosting: direct hardware access shortens response times and increases throughput. Virtualized setups also deliver very high values today, but the hypervisor adds measurable, albeit small, latency. Critical bottlenecks for real-time workloads occur above all when many VMs compete for the same resource at the same time. In such situations, bare metal prevents unwanted spikes and keeps performance constant. For most web applications, however, VM performance is perfectly adequate, especially if resources are properly reserved and limits are set correctly. I therefore first evaluate workload character, I/O profiles and peaks before deciding on exclusivity or virtualization.

Network, storage and hardware tuning

Whether bare metal or VM: the substructure determines whether the theory holds up in practice. On bare metal, I can rely on CPU pinning and NUMA awareness to keep threads close to their memory banks and minimize context switches. In VMs, I achieve much of this by binding vCPUs to physical cores, activating huge pages and setting IRQ affinity cleanly. Paravirtualized drivers (e.g. virtio) bring I/O close to native values. In the network, 10/25/40/100 Gbit/s links, jumbo frames, QoS and, if necessary, SR-IOV deliver consistent latencies; bare metal additionally allows kernel bypass stacks (DPDK) and finer NIC offloads. For storage, NVMe latencies, RAID level, write cache (BBU/PLP), file systems (e.g. XFS, ZFS) and I/O schedulers determine throughput and tail latency. Cluster storage (e.g. Ceph/NFS backends) provides elasticity, while locally attached NVMe scores with maximum IOPS. I plan for bottlenecks per layer (network, storage, CPU, RAM) and measure them separately before scaling.
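
To make the pinning idea tangible, here is a minimal sketch that binds the current process to a single core. It assumes a Linux host and uses Python's standard `os.sched_setaffinity`; the function name `pin_current_process` is my own, and real setups often pin at the hypervisor or service-manager level instead.

```python
import os


def pin_current_process(cpu=None):
    """Pin the calling process to one CPU core.

    Returns the resulting affinity set, or None on platforms
    that do not expose sched_setaffinity (e.g. macOS, Windows).
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    allowed = os.sched_getaffinity(0)  # cores this process may currently use
    target = min(allowed) if cpu is None else cpu
    if target not in allowed:
        raise ValueError(f"CPU {target} is not in the allowed set {sorted(allowed)}")
    os.sched_setaffinity(0, {target})  # 0 means "the calling process"
    return os.sched_getaffinity(0)


if __name__ == "__main__":
    print("affinity after pinning:", pin_current_process())
```

Pinning only helps when I measure before and after; on a NUMA system the chosen core should sit on the node that holds the process memory.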

A comparison of security and compliance

On bare metal, security benefits from physical separation: no other tenant shares the platform, which reduces the attack surface. I set hardening, network segmentation and encryption exactly as required. VM environments isolate guests via the hypervisor; correct configuration plays a decisive role here. Multi-tenancy requires clear security zones, patch discipline and monitoring to prevent lateral movement. Industries with strict requirements often choose bare metal, while many web projects run securely on cleanly hardened VMs. For highly sensitive data, I also check hardware security features such as TPM, Secure Boot and encrypted volumes.

Scaling and deployment

Scaling distinguishes the two models particularly clearly. I expand bare metal capacity via hardware upgrades or additional servers, which means planning and delivery times. VM environments scale vertically and horizontally in a very short time, often automated via orchestration. This speed supports releases, blue/green switches and capacity peaks. Bare metal shines with permanently high loads and stable usage patterns. Those with unclear load profiles are often better off with VM pools, while those with predictable continuous loads see the advantage in exclusive hardware.

Containers and orchestration on bare metal vs. VM

Containers complement both worlds. On bare metal, I get the lowest abstraction layer for Kubernetes, low latencies and direct access to accelerators (GPU/TPU, SmartNICs). On the other hand, I lack convenience functions such as live migration; I plan maintenance windows via rolling updates and pod disruption budgets. In VM clusters, I get additional safety and migration paths: control planes and workers can be migrated as VMs, and guest systems can be frozen via snapshots and restored more quickly. Network overlays (CNI), storage drivers (CSI) and ingress layers determine the actual performance. I consciously select failure domains (racks, hosts, AZs) so that a failure does not affect the entire cluster, and I check whether the cluster autoscaler works better on VM pools or on bare metal nodes.

Cost models and potential savings

Costs are structurally different. Bare metal ties the budget to fixed rates and is worthwhile with permanently high utilization. Virtualized hosting usually charges according to the resources used and relieves budgets when demand fluctuates. To make a transparent decision, I collect utilization data, evaluate load peaks and take operating costs into account. Automation, monitoring and backup are part of both models, but with different proportions of infrastructure and operation. The following table gives a compact overview of the features and cost structure that I regularly use to classify workloads.

| Criterion | Bare metal hosting | Virtualized hosting |
|---|---|---|
| Performance | Maximum, constant, exclusive | Variable, depending on load and VM |
| Scalability | Slow, hardware-bound | Fast, on-demand |
| Security | Maximum physical separation | Good isolation, multi-tenancy requires hardening |
| Customization | Complete, including hardware selection | Limited influence on underlying hardware |
| Cost structure | Fixed monthly/annual rates | Pay-as-you-go according to resources |
| Management effort | High, expert knowledge important | Low, largely automated |

Licensing and proprietary stacks

Licenses often have a greater influence on architecture than technology. Databases and operating systems licensed per core or per socket can favor bare metal if I operate a few heavily utilized hosts. In virtualization, I pay per VM, per vCPU or per host, depending on the model, but benefit from consolidation. Windows workloads with a Datacenter license pay off at high VM density; bare metal can be cheaper with a few large instances. License limits (cores, RAM) and mobility rights are important: not every license allows free movement between hosts or to other data centers. I document mappings (workload → license) and plan reserves so that I can handle load peaks without violating the license.
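
The workload-to-license mapping with reserves can be sketched in a few lines. This is only an illustration under my own assumptions: a single per-core license pool, and hypothetical names (`license_headroom`, the workload keys); real license terms are usually more nuanced.

```python
def license_headroom(licensed_cores, peak_cores_by_workload):
    """Return the remaining licensed cores at peak load.

    A negative result means the peak would exceed the license.
    """
    used_at_peak = sum(peak_cores_by_workload.values())
    return licensed_cores - used_at_peak


if __name__ == "__main__":
    # Hypothetical mapping: workload -> cores needed at peak
    mapping = {"db": 32, "app": 24}
    print("headroom in cores:", license_headroom(64, mapping))
```

I would run such a check whenever a workload is resized, so that a load peak never silently turns into a license violation.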

Backup, disaster recovery and high availability

RPO/RTO define how much data loss and downtime is acceptable. In VM environments, I achieve fast restarts with snapshots, replication and changed block tracking, ideal for app-consistent backups of databases. Bare metal relies more on image backups, PXE restores or configuration automation for quick restarts. For critical services, I combine asynchronous replication, offsite copies and immutable backups (write-once) to reduce ransomware risks. A rehearsed runbook for failover and regular restore tests are mandatory; theory without practice doesn't count. I achieve high availability with multi-AZ, load balancing and redundancy in every layer; the architecture determines whether failover takes seconds or minutes.
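
A restore test only counts if I compare it against the targets. The following sketch does that under a simplifying assumption: the worst-case data loss equals the backup or replication interval. The names `RecoveryTargets` and `meets_targets` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RecoveryTargets:
    rpo_minutes: float  # maximum acceptable data loss
    rto_minutes: float  # maximum acceptable downtime


def meets_targets(targets, backup_interval_minutes, measured_restore_minutes):
    """Compare a backup schedule and a measured restore against RPO/RTO."""
    # Assumption: in the worst case, everything since the last backup is lost.
    worst_case_loss = backup_interval_minutes
    return {
        "rpo_ok": worst_case_loss <= targets.rpo_minutes,
        "rto_ok": measured_restore_minutes <= targets.rto_minutes,
    }


if __name__ == "__main__":
    targets = RecoveryTargets(rpo_minutes=15, rto_minutes=60)
    print(meets_targets(targets, backup_interval_minutes=5, measured_restore_minutes=90))
```

In this example the backup interval satisfies the RPO, but the measured restore misses the RTO, which is exactly the kind of gap a regular restore test should surface.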

Energy, sustainability and efficiency

With a view to sustainability, utilization becomes more important. VMs consolidate fluctuating loads better, which increases performance per watt. Bare metal is convincing when there is a permanently high workload or when special accelerators increase efficiency. I take into account the PUE of the data center, power capping, C-states/Turbo settings in the BIOS, and generation changes for CPUs that deliver significantly more performance per watt. Rectangular load profiles (batch, nightly jobs) can be staggered in VM pools; bare metal can also save energy with precise sizing and sleep strategies. Anyone pursuing CO₂ budgets plans placement, measuring points and KPI reports from the outset.
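
Performance per watt becomes comparable once I include the data center overhead. The sketch below multiplies the IT power by the PUE to estimate facility power; the function name and all numbers are illustrative assumptions, not measurements.

```python
def perf_per_watt(throughput, it_power_watts, pue):
    """Throughput per watt of facility power (IT power multiplied by PUE)."""
    if it_power_watts <= 0:
        raise ValueError("it_power_watts must be positive")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return throughput / (it_power_watts * pue)


if __name__ == "__main__":
    # Hypothetical hosts in the same data center (PUE 1.3)
    consolidated = perf_per_watt(throughput=8000, it_power_watts=400, pue=1.3)
    underused = perf_per_watt(throughput=2000, it_power_watts=250, pue=1.3)
    print(f"consolidated: {consolidated:.2f} req/s per W")
    print(f"underused:    {underused:.2f} req/s per W")
```

The well-utilized host comes out far ahead in this made-up example, which mirrors the argument that consolidation raises efficiency.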

Typical application scenarios 2025

Use cases set the tone in many projects. Permanently CPU- or I/O-intensive systems such as HPC, real-time analysis, financial streaming or game servers run particularly efficiently on dedicated hardware. Development, test and staging environments, on the other hand, benefit from fast VM rollouts, snapshots and low-cost standby. Web stores with highly fluctuating demand scale via VM clusters and keep costs variable. If you are wavering between a VPS and a dedicated machine, the comparison of VPS vs. dedicated server offers further decision-making aids. For strict compliance, I often choose bare metal, while modern cloud workloads with auto-scaling shine in VM pools.

Migration and exit strategy

I plan early how I can change platforms. P2V (Physical-to-Virtual), V2V and V2P reduce migration risks if drivers, kernel versions and boot modes are properly prepared. I replicate databases in advance so that I only lose seconds or minutes during the cutover. Blue/green setups and gradual traffic shifts reduce downtime. Compatibility lists (e.g. file system features, kernel modules), a defined fallback and measurement points are important: I compare latencies, throughput, error rates and costs before and after the switch. A documented exit strategy prevents vendor lock-in and speeds up reactions to price or compliance changes.

Decision tree: 7 questions before you buy

I start with workload profiles: are load peaks rare or frequent, and how severe are they? I then check latency requirements, for example for real-time processing or finance-related processes. The third question focuses on data sovereignty and certifications that may require physical separation. Then I look at the runtime: short-lived projects or long-term projects with a stable workload? Fifth, I evaluate team know-how for patching, observability and recovery. Sixth, I consider possible vendor lock-in in toolchains and hypervisor stacks. Finally, I compare budget paths: fixed rates for bare metal versus variable costs for pay-as-you-go.
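
The seven questions can be turned into a simple tally. This is a deliberately naive sketch: equal weights, a majority vote and "hybrid" on a tie are my own assumptions, and the key names are hypothetical.

```python
from collections import Counter

QUESTIONS = (
    "workload_profile",
    "latency",
    "data_sovereignty",
    "runtime",
    "team_know_how",
    "vendor_lock_in",
    "budget_path",
)
ALLOWED = {"bare_metal", "vm", "neutral"}


def recommend(answers):
    """Majority vote over the seven questions; a tie suggests a hybrid setup."""
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"unanswered questions: {missing}")
    invalid = {answers[q] for q in QUESTIONS} - ALLOWED
    if invalid:
        raise ValueError(f"invalid answers: {sorted(invalid)}")
    votes = Counter(answers[q] for q in QUESTIONS)
    if votes["bare_metal"] > votes["vm"]:
        return "bare_metal"
    if votes["vm"] > votes["bare_metal"]:
        return "vm"
    return "hybrid"


if __name__ == "__main__":
    example = dict.fromkeys(QUESTIONS, "vm")
    example.update(latency="bare_metal", data_sovereignty="bare_metal")
    print(recommend(example))
```

In practice I would weight the questions, because a hard compliance requirement can outvote everything else.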

Sample calculations and TCO thinking

I calculate the total cost of ownership over 12-36 months and simulate two variants: 1) bare metal with a fixed monthly rate, 2) a VM cluster with usage-based billing. Assumptions: base load, peak factor, operating times, data volume, backup frequency, support levels. Fixed costs (hardware/basic fee, housing, licenses) plus variable costs (traffic, storage I/O, snapshots) result in the monthly balance. With 24/7 high load and stable utilization, the calculation typically tips in favor of bare metal; with strongly fluctuating demand, elastic VM pools pay off. I also evaluate operating effort (hours/month), downtime costs (€/minute) and risks (e.g. overprovisioning). Only with these figures does it become clear whether flexibility or exclusivity is economically viable.
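
The two variants can be compared with a small model. This sketch is simplified on purpose: it ignores traffic, storage and licenses, assumes 730 hours per month, and every price and function name is a made-up assumption for illustration.

```python
HOURS_PER_MONTH = 730  # assumption: average month length in hours


def bare_metal_tco(months, monthly_rate, ops_hours_per_month, hourly_ops_rate):
    """Fixed monthly rate plus operating effort."""
    return months * (monthly_rate + ops_hours_per_month * hourly_ops_rate)


def vm_tco(months, base_instances, peak_instances, peak_share,
           price_per_instance_hour, ops_hours_per_month, hourly_ops_rate):
    """Usage-based compute plus operating effort.

    peak_share is the fraction of time (0..1) spent at peak_instances;
    the rest of the time runs at base_instances.
    """
    if not 0.0 <= peak_share <= 1.0:
        raise ValueError("peak_share must be between 0 and 1")
    avg_instances = base_instances * (1 - peak_share) + peak_instances * peak_share
    compute = avg_instances * HOURS_PER_MONTH * price_per_instance_hour
    return months * (compute + ops_hours_per_month * hourly_ops_rate)


if __name__ == "__main__":
    bm = bare_metal_tco(24, monthly_rate=900, ops_hours_per_month=10, hourly_ops_rate=80)
    steady = vm_tco(24, 20, 20, 0.0, 0.12, 4, 80)  # constant high load
    bursty = vm_tco(24, 4, 20, 0.1, 0.12, 4, 80)   # peaks 10% of the time
    print(f"bare metal: {bm:.2f} EUR")
    print(f"VM steady:  {steady:.2f} EUR")
    print(f"VM bursty:  {bursty:.2f} EUR")
```

With these invented numbers, the steady high load is cheaper on bare metal and the bursty load is cheaper on VMs; with real prices, the break-even point has to be recalculated.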

Hybrid models and workload placement

Hybrid combines bare metal and VM instances to handle performance peaks and compliance in equal measure. I process critical core data on dedicated hardware, while scalable front ends run elastically on VMs. This separation enables clean cost control and reduces risks. A clean observability layer keeps both worlds visible and facilitates capacity planning. For role and rights concepts, I refer to the differences between vServer vs. root server, because access models often determine operating costs. When configured correctly, the setup prevents unnecessary bottlenecks and increases availability.

Choosing a provider: What I look out for

What counts for me in the selection process is transparency of resources and clear SLAs. I check the hardware generations, storage profiles, network topology and backups. Then there are support response times, automation features and image catalogs. Pricing models must be predictable and take reservations into account so that there are no surprises. Reference configurations for typical workloads help at the start and facilitate subsequent migrations. If you want consistent support, you should also look out for management options that take over routine tasks and lower operating risks.

Checklist for proof of concept and operation

- Set SLI/SLO: Target values for latency p95/p99, availability, error rates, throughput.

- Load tests: Realistic traffic profiles (burst, gradient, endurance test), database and cache hit rates.

- Security validation: Hardening guidelines, patch cycles, secret handling, network segments, logs.

- Data paths: Backup plan (3-2-1), measure restore time, check replication and encryption.

- Observability: Standardized metrics, traces and logs across both worlds (bare metal/VM).

- Change path: Rolling/blue-green, maintenance windows, documenting and testing rollback scenarios.

- Cost controls: Tagging, budgets, alerts; comparison target/actual per month.

- Capacity planning: Growth assumptions, headroom rules, reservations/commitments.
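
For the SLI/SLO item at the top of this checklist, a latency check against p95/p99 targets can look like the following sketch. It uses the nearest-rank percentile method; the function names and target values are assumptions for illustration.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile for an integer p between 1 and 100."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 1 <= p <= 100:
        raise ValueError("p must be between 1 and 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def check_slo(latencies_ms, p95_target_ms, p99_target_ms):
    """Report p95/p99 and whether both stay within their targets."""
    p95 = percentile(latencies_ms, 95)
    p99 = percentile(latencies_ms, 99)
    return {
        "p95_ms": p95,
        "p99_ms": p99,
        "ok": p95 <= p95_target_ms and p99 <= p99_target_ms,
    }


if __name__ == "__main__":
    measured = list(range(1, 101))  # stand-in for load test results in ms
    print(check_slo(measured, p95_target_ms=100, p99_target_ms=120))
```

I run the same check on bare metal and VM test runs so that both candidates are judged against identical targets.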

Briefly summarized

Bare metal stands for constant peak performance, full control and hard isolation. Virtualized environments score points with agile provisioning, flexible scaling and usage-based costs. I make my decisions based on the workload profile, compliance requirements and budget path. I like to move permanent high loads and sensitive data to dedicated servers; I prefer to run dynamic web projects and test cycles on VMs. A clever combination of the two results in predictable costs, fast releases and an architecture that grows with the project. The result is a solution that balances technology, security and economic efficiency.