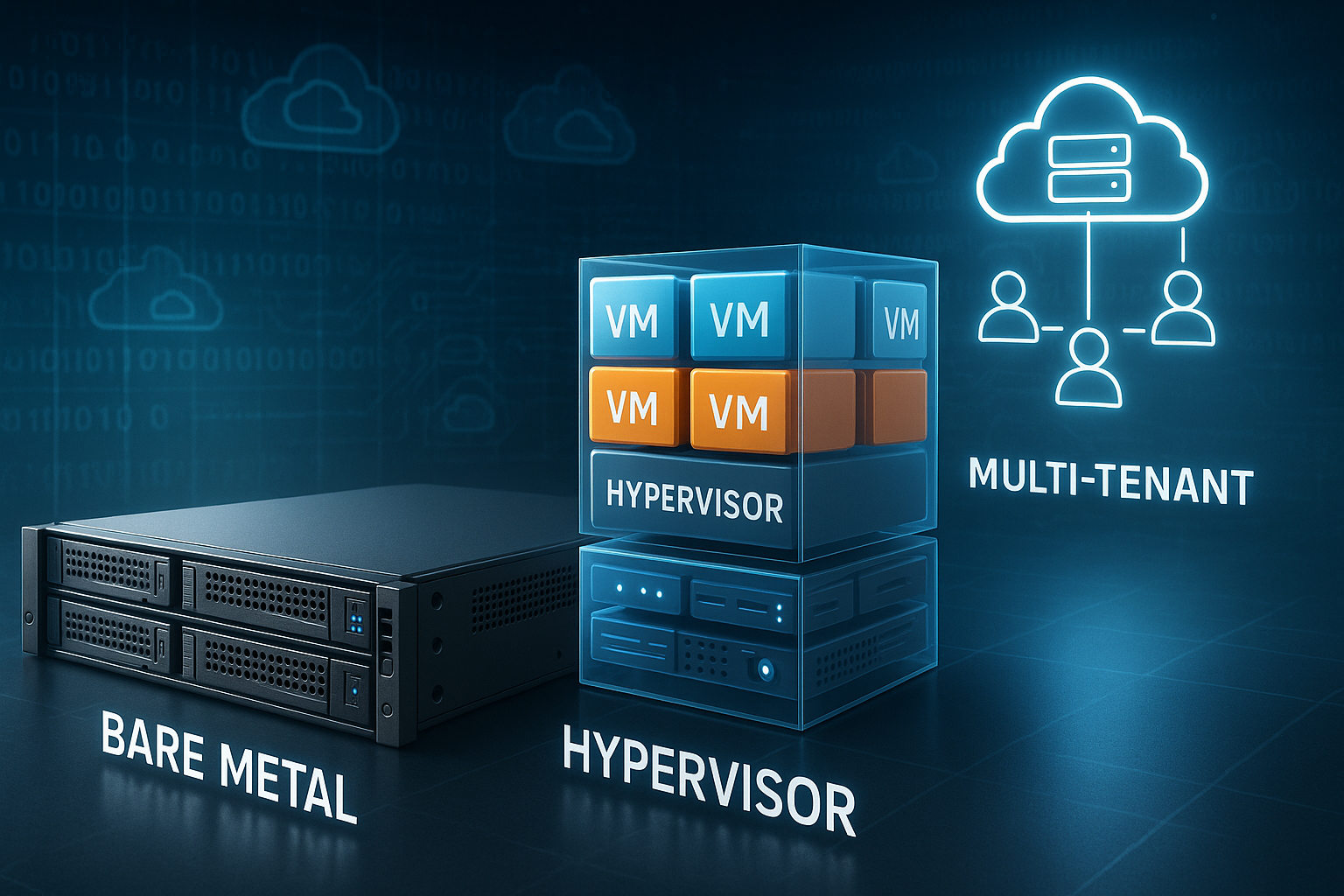

I explain the web hosting jargon surrounding Bare Metal, hypervisor and multi-tenant Concrete and practical. This allows you to immediately understand how the models work, how they differ, and which choice is right for your goals—from individual projects to platforms with many users.

Key points

- Bare Metal: Full hardware control and maximum performance.

- hypervisorVirtualization with clear isolation and flexibility.

- multi-tenant: Efficient use of resources through logical separation.

- Noisy NeighborManage performance cleanly and prevent problems.

- Hybrid: Separate sensitive loads, scale elastically.

Bare metal explained briefly

Bare Metal means: A physical server belongs exclusively to you. You don't share CPU, RAM, or SSD with anyone else. I determine the operating system, storage setup, and security features myself. This allows me to control every layer from the BIOS to the kernel. For sensitive data and peak loads, bare metal provides the most reliable reserves and the lowest latency.

The key factor is the absence of neighbors on the same hardware. This allows me to avoid the Noisy Neighboreffect completely. I plan capacity realistically and maintain consistent performance. Anyone coming from shared environments will notice the difference immediately. A quick introduction can be made with a comparison such as Shared hosting vs. dedicated hosting.

Hardware and network basics for resilient platforms

The foundation determines the scope for improvement. I choose modern CPUs with sufficient cores and strong single-thread performance, plus ECC RAM for integrity. For data paths, I rely on NVMe SSDs with high IOPS density and plan dedicated RAID levels or ZFS profiles to suit the workload. Network cards with SR-IOV reduce overhead and enable stable latencies even at high throughput. 25/40/100 GbE provides reserves for replication, storage traffic, and east-west communication.

With bare metal, I draw directly on hardware features. In virtualized stacks, I use passthrough in a targeted manner: directly connecting NVMe, passing SR-IOV VFs to VMs, CPUs with CPU pinning In multi-tenant operations, I deliberately limit such privileges to ensure fairness and isolation. A well-thought-out topology design (leaf-spine, separate VLANs, dedicated management networks) prevents bottlenecks and simplifies troubleshooting.

Hypervisor: Type 1 vs. Type 2 in practice

A hypervisor is the virtualization layer between hardware and VMs. Type 1 runs directly on the machine and minimizes overhead. Type 2 sits on top of an existing operating system and is well suited for testing. I usually rely on Type 1 for production because isolation and efficiency are important. For lab setups, I use Type 2 because it is easy to use.

CPU pinning, NUMA awareness, and storage caching are important. I use these levers to control latency and throughput. Snapshots, live migration, and HA functions significantly reduce downtime. I choose features based on workload, not marketing terms. This keeps the Virtualization predictable and efficient.

Storage strategies and data layout

Storage determines perceived speed. I separate workloads according to access profile: transactional databases on fast NVMe pools with low latency, analytical jobs on high-bandwidth storage with high sequential performance. Write-back caching I only use it with battery/capacitor backups, otherwise there is a risk of data loss. TRIM and correct queue depths keep SSDs performing well in the long term.

In virtualized environments, I choose between local storage (low latency, but tricky HA) and shared storage (easier migration, but network hop). Solutions such as block-level replication, Thin Provisioning Strict monitoring and separate storage tiers (hot/warm/cold) help balance costs and performance. For backups, I use immutable repositories and test regular restores—not just checksums, but actual system restarts.

Multi-tenant made understandable

multi-tenant This means that many clients share the same infrastructure but remain logically separate. I segment resources cleanly and define quotas. Security boundaries at the network, hypervisor, and application levels protect data. Monitoring tracks load, I/O, and unusual patterns. This allows me to keep costs manageable and respond flexibly to peaks.

The strength lies in flexibility. I can allocate or release capacity at short notice. Pay-as-you-go models reduce fixed costs and encourage experimentation. At the same time, I set strict limits to prevent abuse. With clear Policies Scales multi-tenant securely and predictably.

Resource planning: Consciously controlling overcommitment

Overcommitment is not taboo, but rather a tool. I define clear upper limits: moderate CPU overcommitment (e.g., 1:2 to 1:4, depending on the workload), little to no RAM (memory ballooning only for calculated loads), and storage overcommitment with close telemetry. Huge Pages stabilize memory-intensive services, NUMA binding Prevents cross-socket latencies. I see swap as an airbag, not a driving mode—allocated RAM budgets must be sufficient.

- CPU: Pin critical cores, reserve host cores for hypervisor tasks.

- RAM: Use reservations and limits to avoid uncontrolled ballooning.

- Storage: Plan IOPS budgets per client and set I/O schedulers to match the profile.

- Network: QoS per queue, SR-IOV for latency, dedicated paths for storage.

Noisy Neighbor, Isolation, and Noticeable Performance

I bend Noisy Neighbor CPU limits, I/O caps, and network QoS protect services from external loads. Dedicated storage pools separate latency-critical data. Separate vSwitches and firewalls exclude cross-traffic. I test scenarios with load generators and measure the effects during operation.

Transparency builds trust. I use metrics such as P95 and P99 latency instead of average values. Alerts respond to jitter, not just failures. This allows me to identify bottlenecks early on and take action. Clients remain isolated, and the User experience remains constant.

Observability, testing, and resilient SLOs

I measure systematically: metrics, logs, and traces all come together. For services, I use the RED method (rate, errors, duration), and for platforms, I use the USE method (utilization, saturation, errors). I define SLOs per service—for example, 99.9% with P95 latency below 150 ms—and link them to alerts on Error budgets. This way, I avoid alarm floods and focus on user impact.

Before making changes, I run load tests: baseline, stress, spike, and soak. I check how latencies behave under congestion and where backpressure takes effect. Chaos experiments Verify at the network, storage, and process levels whether self-healing and failover are truly effective. Synthetic checks from multiple regions detect DNS, TLS, or routing errors before users notice them.

Comparison: Bare metal, virtualization, and multi-tenant

I rank hosting models based on control, performance, security, scalability, and price. If you want maximum control, go for Bare Metal. If you want to remain flexible, choose Type 1-based virtualization. Multi-tenant is worthwhile for dynamic teams and variable loads. The following table shows the differences at a glance.

| Criterion | Bare Metal | Virtualized | multi-tenant |

|---|---|---|---|

| resource control | Exclusive, full sovereignty | VM-based, finely controllable | Assigned by software |

| Performance | Very high, hardly any overhead | High, low overhead | Varies depending on density |

| Security | Physically separated | Isolated by hypervisor | Logical separation, policies |

| Scaling | Hardware-bound | Quickly via VMs | Very flexible and fast |

| Price | Higher, predictable | Medium, depending on usage | Cheap to moderate |

| Typical applications | Compliance, high load | All-round, Dev/Prod | SaaS, dynamic projects |

I never make decisions in isolation. I take into account application architecture, team expertise, and budget. Backups, DR plans, and observability are all factored in. This keeps the platform manageable and scalable. Long-term operating costs are just as important as short-term rent.

Operating models and automation

I automate from day one. Infrastructure as Code defines networks, hosts, policies, and quotas. Golden Images Signed Baselines reduce drift. CI/CD pipelines build images reproducibly, roll certificates, and initiate canary rollouts. For recurring tasks, I schedule maintenance windows, announce them early, and keep rollback paths ready.

I control configuration drift with periodic audits and desired target states. Changes are implemented in the platform via change processes—small, reversible, and observable. I manage secrets in versions, with rotation and short-lived tokens. This keeps operations fast and secure at the same time.

Planning costs, scaling, and SLAs for everyday use

I factor in not only hardware, but also operation, licenses, and support. For bare metal, I plan buffers for spare parts and maintenance windows. In multi-tenant environments, I calculate variable load and possible reserves. A clear SLA protects targets for availability and response times. This keeps costs and Service in the lot.

I start scaling conservatively. I scale vertically as long as it makes sense, and then horizontally. Caching, CDNs, and database sharding stabilize response times. I measure effects before rollout in staging. Then I set the appropriate Limits productive.

Plan migration cleanly and minimize lock-in

I start with an inventory: dependencies, data volumes, latency requirements. Then I decide between Lift and shift (fast, minimal conversion), re-platforming (new basis, same app), and refactoring (more effort, but most effective in the long term). I synchronize data with continuous replication, final cutover, and clear fallback levels. If necessary, I plan downtime to be short and at night—with a meticulous runbook.

To combat vendor lock-in, I rely on open formats, standardized images, and abstracted network and storage layers. I maintain exit plans: How do I export data? How do I replicate identities? What steps need to be taken and in what order? This keeps the platform flexible—even when the environment changes.

Financial management (FinOps) in everyday life

I actively control costs. I set utilization targets per layer (e.g., 60–70% CPU, 50–60% RAM, 40–50% storage IOPS), tag resources clearly, and create transparency across teams. right-sizing I eliminate idle time and only use reservations when the base load is stable. I handle bursts flexibly. Showback/chargeback motivates teams to respect budgets and request capacity sensibly.

Virtualization or containers?

I compare virtual machines with dumpster diving by density, startup time, and isolation. Containers start faster and use resources efficiently. VMs provide stronger separation and flexible guest operating systems. Hybrid forms are common: containers on VMs with Type 1 hypervisors. I'll show you more about this in my guide. Containers or VMs.

The purpose of the application is important. If it requires kernel functions, I use VMs. If it needs many short-lived instances, I use containers. I secure both worlds with image policies and signatures. I separate network segments in a finely granular manner. This keeps deployments fast and clean.

Using hybrid models sensibly

I separate sensitive core data Bare Metal and operate elastic front ends in a virtualized or multi-tenant cluster. This allows me to combine security with agility. I handle traffic spikes with auto-scaling and caches. I secure data flows with separate subnets and encrypted links. This reduces risk and keeps costs under control.

A practical comparison shows whether the mix is right, as Bare metal vs. virtualized. I start with clear SLOs for each service. Then I set capacity targets and escalation paths. I test failover realistically and regularly. This ensures that the interaction remains Reliable.

Security, compliance, and monitoring on equal footing

I treat Security Not as an add-on, but as an integral part of operations. Hardening starts with the BIOS and ends with the code. I manage secrets centrally and version them. Zero-trust networks, MFA, and role-based access are standard. Patching follows fixed cycles with clear maintenance windows.

I implement compliance with logging, tracing, and audit trails. I collect logs centrally and correlate events. I prioritize alarms according to risk, not quantity. Drills keep the team responsive. This ensures that the platform remains verifiable and transparent.

Data residency, deletion concepts, and key management

I clearly define where data may be stored and which paths it may take. Encryption at rest and in transit are standard, and I manage keys separately from the storage location. I use BYOK/HYOK models when separation of operator and data holder is required. Traceable processes apply to deletions: from logical deletion to cryptographic destruction to physically secure disposal of data carriers. This is how I meet data protection and verifiability requirements.

Energy efficiency and sustainability

I plan with efficiency in mind. Modern CPUs with good performance-per-watt values, dense NVMe configurations, and efficient power supplies reduce consumption. Consolidation is better than islands: better to have a few well-utilized hosts than many half-empty ones. I optimize cooling and airflow via rack arrangement and temperature zones. Measurement is mandatory: power metrics flow into capacity and cost models. This allows me to save energy without sacrificing performance.

Summary: Using web hosting jargon with confidence

I use Bare Metal, when full control, consistent performance, and physical separation are crucial. For flexible projects, I rely on hypervisor-based virtualization and combine it with containers as needed. I choose multi-tenant when elasticity and cost efficiency are priorities and good isolation is a given. Hybrid combines the strengths, separates sensitive parts, and scales dynamically at the edge. With clear metrics, automation, and discipline, web hosting jargon is not a hurdle, but a toolbox for stable, fast platforms.