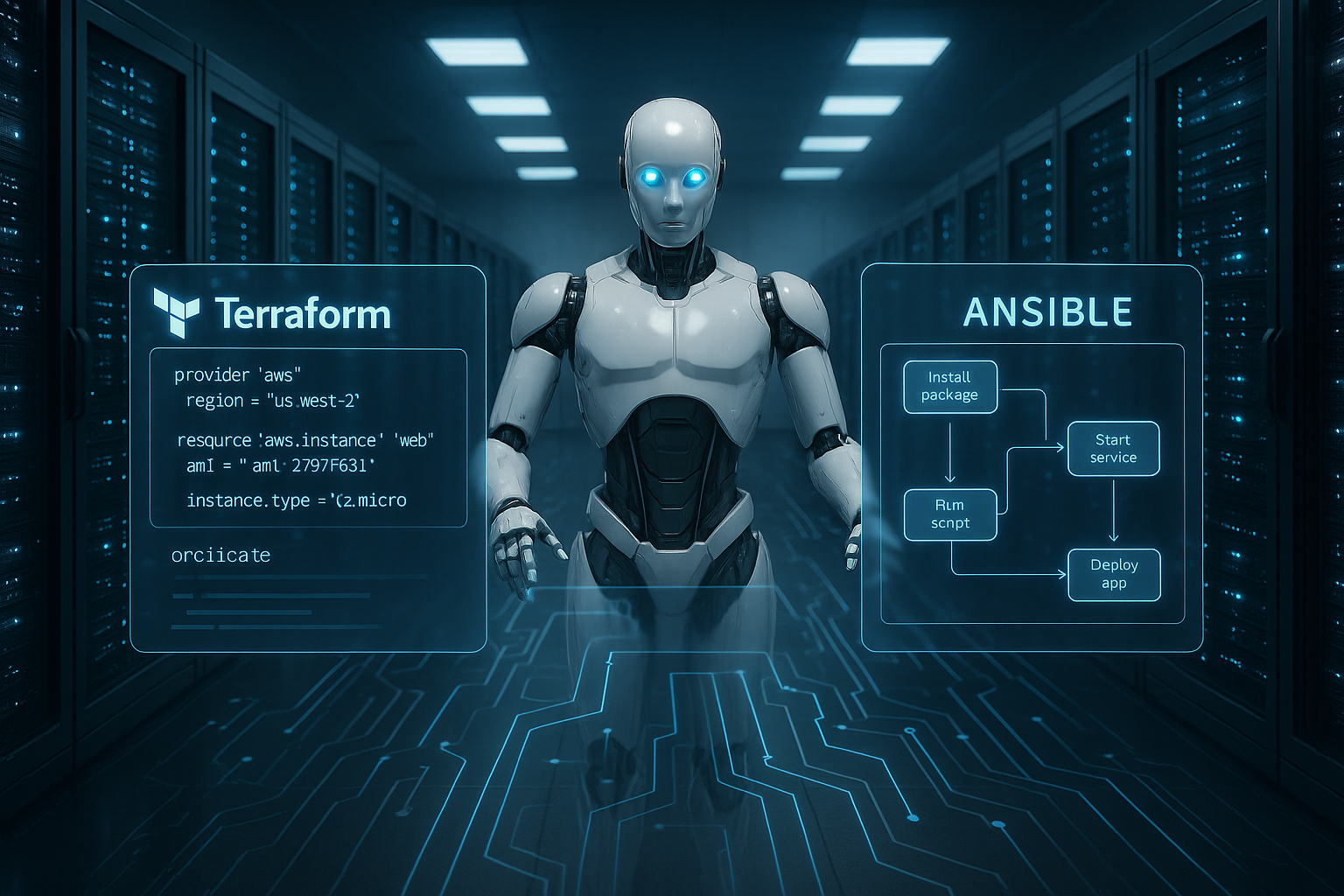

I show how Terraform and Ansible interact in hosting: Terraform builds infrastructure reproducibly, and Ansible efficiently configures servers, services and apps. This is how I automate provisioning, configuration and lifecycle management end to end, from the VM to the WordPress stack.

Key points

- IaC approach: Define infrastructure as code and roll it out in a repeatable manner

- Role clarification: Terraform for resources, Ansible for configuration

- Workflow: Day 0 with Terraform, Day 1/2 with Ansible

- Quality: Consistency, traceability, fewer errors

- Scaling: Multi-cloud, modules, playbooks and pipelines

Automated infrastructure provisioning in hosting explained in brief

I rely on Infrastructure as Code to create servers, networks and storage declaratively rather than manually. This allows me to document every desired target state as code and deploy it securely. The benefits are obvious: I provide hosting environments more quickly, keep them consistent and reduce typos and manual errors. For WordPress or store setups in particular, this saves me time on recurring tasks. Auditable states, reproducible deployments and clean deletion processes ensure more transparency over costs and governance.

Terraform: Roll out infrastructure in a plannable way

I use Terraform to describe resources in HCL as modules and record their state in the state file. This allows me to plan changes in advance, see their effects and implement them in a controlled manner. Multi-cloud scenarios remain possible, as providers are available for all common platforms. I standardize networks, compute, databases and load balancers using reusable modules. For beginners, it is worth reading up on the Terraform basics to master syntax, state handling and policies.
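To make the plan-before-apply idea concrete, here is a deliberately simplified Python model of what a declarative plan computes: it compares the desired state with the recorded state and lists what to create, update and delete. The `plan` function and the resource addresses are hypothetical; real Terraform additionally handles dependencies, computed attributes and replacements.

```python
"""Toy model of a declarative plan (a simplification, not Terraform's algorithm)."""

# Resources are keyed by a hypothetical address; values are their attributes.
State = dict[str, dict]


def plan(desired: State, current: State) -> dict[str, list[str]]:
    """Diff the desired state against the recorded state."""
    create = sorted(a for a in desired if a not in current)
    delete = sorted(a for a in current if a not in desired)
    update = sorted(a for a in desired if a in current and desired[a] != current[a])
    return {"create": create, "update": update, "delete": delete}


if __name__ == "__main__":
    desired = {"vm.web1": {"size": "medium"}, "vm.web2": {"size": "medium"}}
    current = {"vm.web1": {"size": "small"}, "vm.old": {"size": "small"}}
    print(plan(desired, current))
    # {'create': ['vm.web2'], 'update': ['vm.web1'], 'delete': ['vm.old']}
```

Because the plan is computed before anything changes, it can be reviewed like any other diff.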

For teams, I separate states per environment (Dev/Staging/Prod) via Workspaces and remote backends with locking. Clean tagging, clearly defined variables and a consistent folder structure (e.g. envs/, modules/, live/) prevent uncontrolled growth. I integrate sensitive provider and variable values via KMS/Vault and keep them out of the code repository. This keeps deployments reproducible and auditable, even if several operators are working on the platform in parallel.

Bootstrap and access: Cloud-Init, SSH and Bastion

After provisioning, I use cloud-init or user data to set basic configuration directly at first boot: hostname, time synchronization, package sources, initial users and SSH keys. For isolated networks, I operate a bastion (jump host) and route all Ansible connections through it via ProxyCommand or the SSH configuration. This keeps production subnets private while still allowing agentless automation. I describe the necessary firewalls and security groups in Terraform so that access remains minimal and traceable.
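A common way to route Ansible through a bastion is the `ansible_ssh_common_args` connection variable combined with an OpenSSH `ProxyCommand`. The sketch below builds that value in Python, for example to write it into group variables for private hosts; the host name `bastion.example.com` and the user `ops` are placeholders.

```python
import shlex


def bastion_ssh_args(bastion_host: str, bastion_user: str = "ops") -> str:
    """Return an ansible_ssh_common_args value that tunnels SSH through a bastion.

    `ssh -W %h:%p` forwards the connection to the target host and port, so the
    private host never needs a public IP. The default user "ops" is a placeholder.
    """
    proxy = f"ssh -W %h:%p -q {bastion_user}@{bastion_host}"
    return f"-o ProxyCommand={shlex.quote(proxy)}"


def private_group_vars(bastion_host: str) -> dict[str, str]:
    """Group variables for hosts that are only reachable via the bastion."""
    return {"ansible_ssh_common_args": bastion_ssh_args(bastion_host)}


if __name__ == "__main__":
    print(private_group_vars("bastion.example.com"))
```

On OpenSSH 7.3 and later, `-o ProxyJump=ops@bastion.example.com` achieves the same more concisely.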

Ansible: Securely automate configuration and orchestration

After deployment, Ansible takes over configuration management, agentless via SSH. I write playbooks in YAML and describe the steps for packages, services, users, permissions and templates. Idempotent tasks guarantee that repeated runs maintain the target state. This is how I install PHP, databases, caches, TLS certificates and monitoring without manual work. For deployments, I combine roles, variables and inventories to keep staging, testing and production consistent and to avoid drift.
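Idempotency is what makes repeated runs safe: a task checks the current state first and only acts, and reports a change, when something differs. The hypothetical `ensure_line` helper below illustrates the idea in Python; it is loosely modeled on the behavior of Ansible's `lineinfile` module, not on its implementation.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in the file; return True only if the file changed."""
    text = path.read_text() if path.exists() else ""
    if line in text.splitlines():
        return False  # target state already reached: nothing to do
    # Keep the file newline-terminated before appending.
    separator = "" if text == "" or text.endswith("\n") else "\n"
    path.write_text(text + separator + line + "\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        ini = Path(tmp) / "php.ini"
        print(ensure_line(ini, "memory_limit = 256M"))  # True: first run changes the file
        print(ensure_line(ini, "memory_limit = 256M"))  # False: second run is a no-op
```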

In day-to-day operations, I use handlers consistently so that services restart only when relevant changes occur, and I validate templates with check mode and diff (--check --diff). For large fleets, I parallelize via forks, roll out in batches, and control dependencies via serial execution or tags. This keeps changes low-risk and traceable.
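Handler semantics are easy to misremember, so here is a small Python model of them: only tasks that report a change notify handlers, each notified handler runs once at the end of the play, and handlers run in the order they are defined rather than the order they were notified. The `Task` class and `handlers_to_run` function are illustrative only and ignore details such as `meta: flush_handlers` and failed tasks.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    changed: bool                      # did this task change anything on the host?
    notify: list[str] = field(default_factory=list)


def handlers_to_run(tasks: list[Task], handler_definitions: list[str]) -> list[str]:
    """Return the handlers that run at the end of the play, in definition order."""
    notified: set[str] = set()
    for task in tasks:
        if task.changed:               # unchanged tasks never notify
            notified.update(task.notify)
    # Each handler runs at most once, ordered by the handlers section.
    return [name for name in handler_definitions if name in notified]


if __name__ == "__main__":
    play = [
        Task("render nginx.conf", changed=True, notify=["reload nginx"]),
        Task("render php.ini", changed=False, notify=["restart php-fpm"]),
        Task("render vhost", changed=True, notify=["reload nginx"]),
    ]
    print(handlers_to_run(play, ["restart php-fpm", "reload nginx"]))  # ['reload nginx']
```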

Terraform vs. Ansible at a glance

I separate tasks clearly: Terraform creates and changes resources, Ansible configures the systems running on them. This separation reduces errors, speeds up changes and improves the overview. Terraform's declarative model, with its plan-before-apply workflow, fits VMs, networks and services perfectly. Procedural tasks in Ansible cover installations, file changes, restarts and deployments. Together, this ensures a clean division of roles and short paths for changes.

| Feature | Terraform | Ansible |
|---|---|---|
| Objective | Resource provisioning (Day 0) | Configuration & orchestration (Day 1/2) |
| Approach | Declarative (target state) | Procedural (steps/tasks) |
| State | Tracked in a state file | Stateless (relies on idempotency) |
| Focus | VMs, networks, databases, LB | Packages, services, deployments, security |
| Agents | Agentless | Typically agentless via SSH |
| Scaling | Multi-cloud providers | Roles, inventories, parallelization |

Outputs and dynamic inventories

So that Ansible knows exactly which hosts to configure, I feed Terraform outputs directly into an inventory. I export IPs, hostnames, roles and labels as structured values and build host groups from them. This keeps inventories synchronized with the real state at all times. A simple approach is to export the outputs as JSON and convert them into a YAML/JSON inventory that Ansible reads in. This closes the gap between provisioning and configuration without manual intermediate steps.
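A minimal sketch of that conversion in Python, assuming the Terraform configuration defines an output named `hosts` whose value is a list of objects with `name`, `ip` and `role` keys (that output is hypothetical). `terraform output -json` wraps each output in an object with a `value` field; the result follows Ansible's YAML/JSON inventory layout with one group per role.

```python
import json
import sys


def inventory_from_outputs(tf_outputs: dict) -> dict:
    """Turn `terraform output -json` data into an Ansible YAML/JSON inventory.

    Assumes a hypothetical output named "hosts" whose value is a list of
    objects with "name", "ip" and "role" keys; each role becomes a group.
    """
    groups: dict[str, dict] = {}
    for host in tf_outputs["hosts"]["value"]:
        group = groups.setdefault(host["role"], {"hosts": {}})
        group["hosts"][host["name"]] = {"ansible_host": host["ip"]}
    return {"all": {"children": groups}}


if __name__ == "__main__":
    # Usage: terraform output -json > tf.json && python3 tf_inventory.py tf.json > inventory.json
    if len(sys.argv) == 2:
        with open(sys.argv[1]) as fh:
            print(json.dumps(inventory_from_outputs(json.load(fh)), indent=2))
```

The generated file can then be passed to `ansible-playbook -i inventory.json site.yml`; the file and script names are placeholders.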

How Terraform and Ansible work together

I start with Terraform and create networks, subnets, security rules, VMs and management access; I pass the resulting IPs and hostnames on to Ansible. I then use playbooks to install operating system packages, agents, web servers, PHP-FPM, databases and caching layers. I apply policies such as password rules, firewall rules and log rotation automatically and keep them consistent. When scaling, I add new instances via Terraform and let Ansible take over their configuration. At the end of the lifecycle, I remove resources in a controlled manner so that dependencies are resolved cleanly and costs stay transparent.
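The controlled teardown relies on dependency order: resources are created dependencies-first and destroyed in reverse. Terraform derives this from its dependency graph automatically; the Python sketch below only illustrates the principle with the standard library's `graphlib` on a hypothetical five-resource graph.

```python
from graphlib import TopologicalSorter

# Hypothetical graph: each resource maps to the resources it depends on.
DEPENDS_ON: dict[str, set[str]] = {
    "vpc": set(),
    "subnet": {"vpc"},
    "security_group": {"vpc"},
    "vm_web": {"subnet", "security_group"},
    "load_balancer": {"vm_web", "subnet"},
}


def create_order(graph: dict[str, set[str]]) -> list[str]:
    """Dependencies first (static_order yields predecessors before dependents)."""
    return list(TopologicalSorter(graph).static_order())


def destroy_order(graph: dict[str, set[str]]) -> list[str]:
    """Dependents first, so nothing is deleted while something still uses it."""
    return list(reversed(create_order(graph)))


if __name__ == "__main__":
    print("create: ", create_order(DEPENDS_ON))
    print("destroy:", destroy_order(DEPENDS_ON))
```

The relative order of independent resources (here `subnet` and `security_group`) is not fixed, which is also why Terraform can handle them in parallel.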

WordPress hosting: example from practice

For a WordPress setup, I define the VPC, subnets, routing, security groups, database instances and an autoscaling web cluster in Terraform. Ansible then sets up NGINX or Apache, PHP extensions, MariaDB/MySQL parameters, object cache and TLS. I deploy the WordPress installation, configure PHP-FPM workers, enable HTTP/2 and secure wp-config.php with appropriate file permissions. I also automate Fail2ban, logrotate, backup jobs and metrics for load, RAM, I/O and latency. This gives me repeatable deployments with clear rollback paths and fast recovery.

For low-risk updates, I rely on blue/green or rolling deployments: new web instances are set up in parallel, configured, tested and only then attached behind the load balancer. I handle database changes carefully with migration windows, read replicas and backups. I include static assets, cache warming and CDN rules in the playbooks so that switchovers run without surprises.
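The switchover rule can be reduced to a simple gate: traffic moves to the new (green) pool only when enough of its instances exist and all of them pass health checks; otherwise the current (blue) pool keeps serving and doubles as the rollback path. The Python below is a hypothetical sketch of that decision, not a load balancer API; in practice the health data would come from the load balancer or monitoring.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    name: str                 # e.g. "blue" or "green"
    health: dict[str, bool]   # instance id -> passed its health check?

    def ready(self, min_instances: int) -> bool:
        """Ready when the pool is large enough and every instance is healthy."""
        return len(self.health) >= min_instances and all(self.health.values())


def choose_live_pool(live: Pool, candidate: Pool, min_instances: int) -> Pool:
    """Switch to the candidate pool only if it is fully ready; else keep the live pool."""
    return candidate if candidate.ready(min_instances) else live


if __name__ == "__main__":
    blue = Pool("blue", {"web1": True, "web2": True})
    green = Pool("green", {"web3": True, "web4": False})
    print(choose_live_pool(blue, green, 2).name)  # blue: web4 is not healthy yet
```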

Mastering state, drift and security

I store the Terraform state file centrally in a versioned backend with locking so that nobody can overwrite changes concurrently. I document intended deviations using variables, and I fix unwanted drift with a plan and a subsequent apply. For secrets, I use Vault or KMS integrations, while Ansible keeps sensitive values in encrypted variables. Playbooks contain security baselines that I regularly enforce on new hosts. I keep logs, metrics and alerts consistent so that I can detect and understand incidents more quickly.

I also enforce tagging and naming conventions strictly: resources receive mandatory labels for cost center, environment and owner. This facilitates FinOps evaluations and lifecycle policies (e.g. automatic shutdown of non-production systems) and makes compliance audits easier. For sensitive changes, I rely on change windows with an approved Terraform plan and documented Ansible runs.
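A check like the following could run in the pipeline to enforce mandatory tags and to select systems for automatic shutdown. The tag keys (`cost_center`, `environment`, `owner`), the environment values and the resource list format are assumptions for illustration; in practice the data would come from the Terraform plan or a cloud inventory.

```python
# Hypothetical conventions: adjust to your own tagging standard.
REQUIRED_TAGS = {"cost_center", "environment", "owner"}
NON_PRODUCTION = {"dev", "staging"}


def missing_tags(resources: list[dict]) -> dict[str, set[str]]:
    """Map each non-compliant resource name to the mandatory tags it lacks."""
    report = {}
    for res in resources:
        missing = REQUIRED_TAGS - set(res.get("tags", {}))
        if missing:
            report[res["name"]] = missing
    return report


def shutdown_candidates(resources: list[dict]) -> list[str]:
    """Resources explicitly tagged as non-production; untagged ones are left alone."""
    return [
        res["name"]
        for res in resources
        if res.get("tags", {}).get("environment") in NON_PRODUCTION
    ]


if __name__ == "__main__":
    fleet = [
        {"name": "web-prod", "tags": {"cost_center": "4711", "environment": "prod", "owner": "ops"}},
        {"name": "web-dev", "tags": {"environment": "dev", "owner": "alice"}},
    ]
    print(missing_tags(fleet))         # {'web-dev': {'cost_center'}}
    print(shutdown_candidates(fleet))  # ['web-dev']
```

Treating untagged resources as "not a shutdown candidate" is a deliberately conservative choice: a missing tag should fail the compliance check, not switch off a possibly productive system.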

Policy as Code, Compliance and Governance

I anchor policies in code: Which regions are allowed, which instance types, which network segments? I enforce conventions via modules and validations. I run policy checks before every apply so that deviations are caught early. For Ansible, I define security benchmarks (e.g. SSH hardening, password and audit policies) as roles that apply consistently to all hosts. This keeps governance requirements measurable, and exceptions are documented deliberately instead of being tolerated by chance.
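One lightweight way to run such a check before `apply` is to evaluate the machine-readable plan from `terraform show -json`, whose `resource_changes` entries carry the resource `type`, the planned `actions` and the `after` attributes. The sketch assumes the AWS provider's `aws_instance` resource and a hypothetical allow-list of instance types.

```python
import json
import sys

# Hypothetical allow-list; real policies would also cover regions and networks.
ALLOWED_INSTANCE_TYPES = {"t3.small", "t3.medium", "m6i.large"}


def violations(plan: dict) -> list[str]:
    """List policy violations in the JSON produced by `terraform show -json <planfile>`."""
    found = []
    for rc in plan.get("resource_changes", []):
        actions = set(rc["change"]["actions"])
        # Only resources that will be created or updated need checking.
        if rc["type"] != "aws_instance" or not actions & {"create", "update"}:
            continue
        instance_type = (rc["change"].get("after") or {}).get("instance_type")
        if instance_type not in ALLOWED_INSTANCE_TYPES:
            found.append(f"{rc['address']}: instance type {instance_type!r} not allowed")
    return found


if __name__ == "__main__":
    # Usage: terraform plan -out=tfplan && terraform show -json tfplan > plan.json
    #        python3 policy_check.py plan.json   (a non-zero exit blocks the apply)
    if len(sys.argv) == 2:
        with open(sys.argv[1]) as fh:
            problems = violations(json.load(fh))
        print("\n".join(problems) or "policy check passed")
        sys.exit(1 if problems else 0)
```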

Thinking containers, Kubernetes and IaC together

Many hosting teams combine VMs for databases with containers for web processes to optimize density and startup times. I model both with Terraform and leave host hardening, runtime installation and registry access to Ansible. For cluster workloads, I compare orchestration concepts and decide which approach suits the governance requirements. The article Docker vs. Kubernetes offers useful considerations on this. What remains important: I keep deployments reproducible and protect images against drift so that releases remain reliable.

In hybrid setups, I define clusters, node groups and storage with Terraform, while Ansible standardizes the base OS layer. Access to container registries, secrets and network policies is part of the playbooks. This means that even a mixed stack of database VMs and container-based web frontends remains in a consistent lifecycle.

CI/CD, tests and rollbacks

I integrate Terraform and Ansible runs into pipelines so that changes are automatically checked, planned and rolled out with minimal errors. I back unit and lint checks with quality gates, while plans and dry runs give me transparency before each apply. For playbooks, I use test environments to validate handlers, idempotency and dependencies cleanly. Clear rollback strategies and versioning of modules and roles speed up troubleshooting. If you want to get started, the article CI/CD pipelines in hosting offers inspiration for expanding your own workflows step by step.
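For the plan step, `terraform plan -detailed-exitcode` is handy in pipelines because its exit code distinguishes no changes (0), an error (1) and pending changes (2). The Python wrapper below is a sketch of a gate built on that; the stage labels it returns are hypothetical placeholders for whatever your CI system uses, for example to require manual approval when changes are present.

```python
import subprocess


def classify(returncode: int) -> str:
    """Map terraform plan -detailed-exitcode results to a pipeline decision."""
    if returncode == 0:
        return "no-changes"           # nothing to apply, skip the apply stage
    if returncode == 2:
        return "changes-need-review"  # plan saved, wait for approval before apply
    return "failed"                   # 1 (or anything unexpected) stops the pipeline


def plan_gate(workdir: str) -> str:
    """Run the plan non-interactively, save it to `tfplan`, and classify the outcome."""
    result = subprocess.run(
        ["terraform", "plan", "-detailed-exitcode", "-input=false", "-out=tfplan"],
        cwd=workdir,
    )
    return classify(result.returncode)
```

Applying the saved file afterwards with `terraform apply tfplan` executes exactly the reviewed plan, or fails if the state has changed in the meantime.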

Performance and scaling of the pipeline

For large fleets, I scale Terraform with carefully tuned parallelism and granular targets without tearing up the architecture. I describe dependencies explicitly to avoid race conditions. In Ansible, I control forks, serial and max_fail_percentage to roll out changes safely in waves. Caching (facts, package cache, Galaxy roles) and reusable artifacts noticeably reduce runtimes. This keeps delivery fast without sacrificing reliability.
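The wave logic behind `serial` and `max_fail_percentage` can be sketched in a few lines of Python. This is a simplified model, assuming integer batch sizes only (Ansible also accepts percentages): a list of sizes is consumed in order, the last size repeats until all hosts are covered, and a batch aborts the play only when its failure rate exceeds, not merely equals, the threshold.

```python
def batches(hosts: list[str], serial: list[int]) -> list[list[str]]:
    """Split hosts into rollout waves, modeled on the list form of Ansible's `serial`."""
    if not serial or any(size < 1 for size in serial):
        raise ValueError("serial needs at least one positive batch size")
    waves, start, step = [], 0, 0
    while start < len(hosts):
        size = serial[min(step, len(serial) - 1)]  # last size repeats
        waves.append(hosts[start:start + size])
        start += size
        step += 1
    return waves


def should_abort(failed: int, batch_size: int, max_fail_percentage: float) -> bool:
    """True when the failure rate in a batch exceeds the threshold (equal is not enough)."""
    return failed / batch_size * 100 > max_fail_percentage


if __name__ == "__main__":
    fleet = [f"web{n}" for n in range(1, 13)]
    print([len(w) for w in batches(fleet, [1, 5, 4])])     # [1, 5, 4, 2]
    print(should_abort(2, 4, 49), should_abort(2, 4, 50))  # True False
```

A single canary host first, then growing waves, limits the blast radius of a faulty change to a few machines.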

Practical recommendations for getting started

I start small: one repo, a clear folder structure, naming conventions and versioning. Then I define a minimal environment with a network, a VM and a simple web role to practice the entire flow. I set up variables, secrets and remote state early on so that later team steps run smoothly. I then modularize by component (VPC, compute, DB, LB) and write roles for web, DB and monitoring. This gradually creates a reusable library of modules and playbooks that supports releases reliably.

Migration of existing environments

Many teams do not start on a greenfield. I proceed step by step: First, I take an inventory of manually created resources and bring them under Terraform management via import, accompanied by modules that reflect the target design. At the same time, I introduce Ansible roles that reproduce the current state and then gradually raise it to the desired standard configuration. This avoids big-bang projects and reduces risk through controlled, traceable changes.
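Since Terraform 1.5, existing objects can also be adopted declaratively with `import` blocks instead of one-off `terraform import` commands. The Python helper below renders such blocks from a mapping of target addresses to provider-specific IDs; the address and the instance ID shown are hypothetical.

```python
def import_blocks(resources: dict[str, str]) -> str:
    """Render one Terraform `import` block per existing object.

    Keys are resource addresses in the new configuration, values are the IDs
    of the manually created objects (their format depends on the provider).
    """
    blocks = [
        f'import {{\n  to = {address}\n  id = "{object_id}"\n}}\n'
        for address, object_id in resources.items()
    ]
    return "\n".join(blocks)


if __name__ == "__main__":
    print(import_blocks({"aws_instance.web1": "i-0123456789abcdef0"}))
```

A subsequent `terraform plan` shows which objects will be imported; with `-generate-config-out=generated.tf`, Terraform can also draft configuration for objects that have no resource block yet, which should be reviewed before committing.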

Troubleshooting and typical error patterns

In practice, I see recurring patterns: manual hotfixes create drift, which is reverted during the next run. Clear processes (tickets, PRs, reviews) and regular runs help to detect deviations early. In Ansible, non-idempotent tasks lead to unnecessary restarts; I prefer modules over shell commands and set changed_when/failed_when in a targeted manner. I clarify network issues (bastion, security groups, DNS) early so that connections are stable. And I log every run so that I can fully trace causes in audits.
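A simple idempotency test runs the same playbook twice and checks that the second run reports `changed=0` for every host in the PLAY RECAP. The parser below is a sketch that assumes plain, uncolored output (for example with `ANSIBLE_NOCOLOR=1`); tools such as Molecule include a comparable idempotence check.

```python
import re

# One PLAY RECAP line, e.g. "web1 : ok=5 changed=0 unreachable=0 failed=0 ..."
RECAP_LINE = re.compile(r"^(?P<host>\S+)\s*:\s*(?P<stats>(?:\w+=\d+\s*)+)$")


def parse_recap(output: str) -> dict[str, dict[str, int]]:
    """Extract per-host counters from plain (uncolored) ansible-playbook output."""
    stats: dict[str, dict[str, int]] = {}
    for line in output.splitlines():
        match = RECAP_LINE.match(line.strip())
        if match:
            counters = re.findall(r"(\w+)=(\d+)", match["stats"])
            stats[match["host"]] = {key: int(value) for key, value in counters}
    return stats


def non_idempotent_hosts(second_run_output: str) -> list[str]:
    """Hosts that still report changes when the same playbook runs a second time."""
    return sorted(
        host for host, counters in parse_recap(second_run_output).items()
        if counters.get("changed", 0) > 0
    )
```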

Summary: What really counts

I automate the provisioning of the infrastructure with Terraform and leave the configuration to Ansible. The separation of tasks ensures consistency, speed and fewer human errors. Modules, roles and policies make deployments manageable and auditable. Those who take this approach save time, reduce risks and gain scalability across clouds and environments. What counts in the end are traceable processes that make every change visible, testable and repeatable.