Container security in hosting is about risk, liability and trust. I show in practical terms how I harden Docker and Kubernetes environments so that hosters reduce their attack surface and contain incidents cleanly.

Key points

The following key aspects guide my decisions and priorities when it comes to container security. They provide a direct starting point for hosting teams who want to measurably reduce risks.

- Harden images: keep them minimal, scan regularly, never start as root.

- Strict RBAC: keep permissions narrow, keep audit logs active, prevent uncontrolled growth of rights.

- Segment the network: default-deny, limit east-west traffic, verify policies.

- Runtime protection: monitoring, EDR/eBPF, early detection of anomalies.

- Backup & recovery: practice snapshots, back up secrets, test recovery.

I prioritize these points because they offer the greatest leverage for real risk reduction. Those who work rigorously here close the most common gaps in everyday cluster operation.

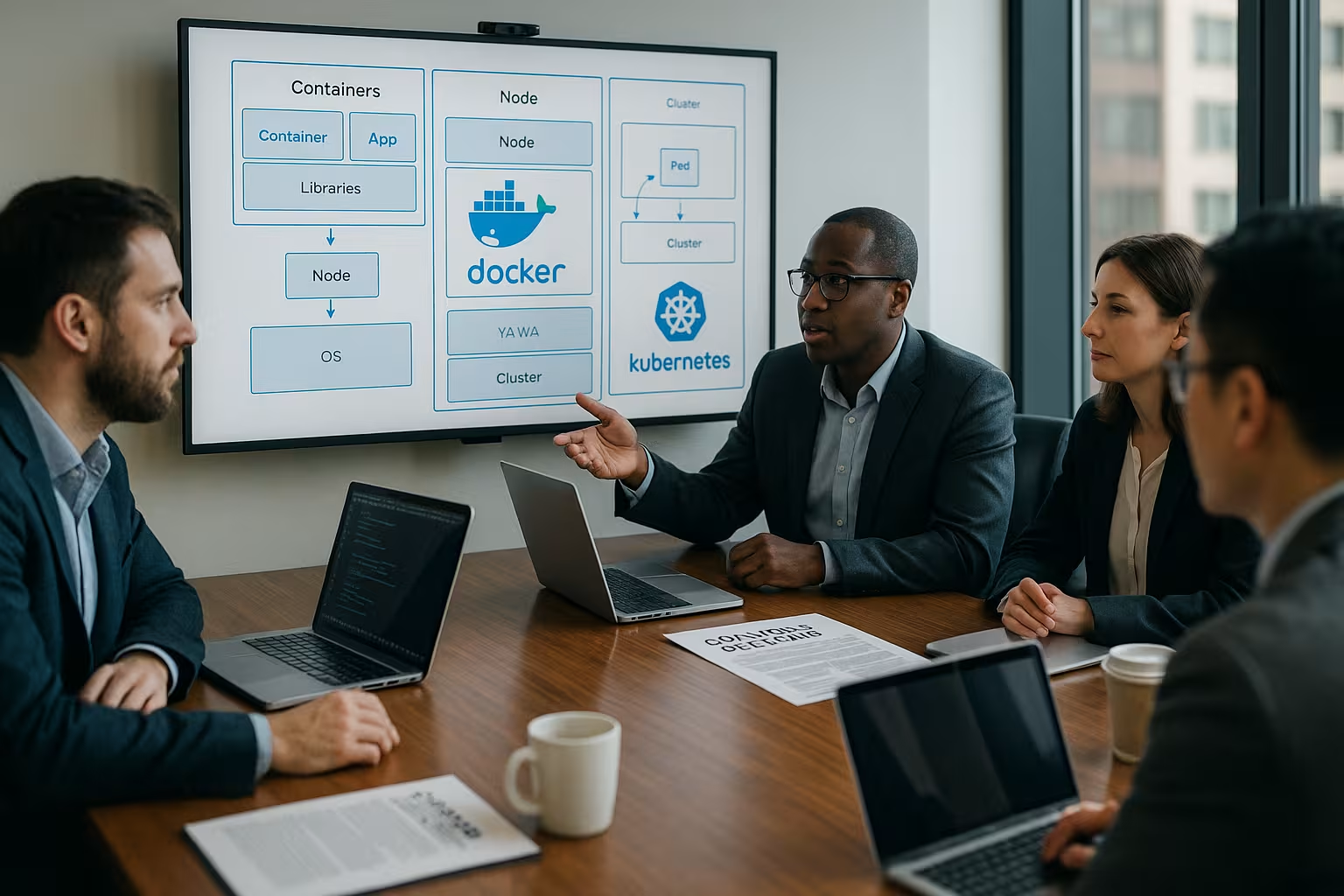

Why security is different in containers

Several containers share one kernel, so a single flaw often turns into lateral movement. An unclean base image multiplies vulnerabilities across dozens of deployments. Misconfigurations such as overly broad permissions or open sockets can expose the host within minutes. I plan defenses in multiple layers: from build through registry, admission and network to runtime. As a starting point, it is worth taking a look at isolated hosting environments, because isolation and least privilege are clearly measurable there.

Operating Docker securely: Images, Daemon, Network

I use minimal, tested base images and move configuration and secrets to runtime. Containers do not run as root, Linux capabilities are reduced and sensitive files do not end up in the image. The Docker daemon remains isolated, and I only expose API endpoints with strong TLS protection. I never mount the Docker socket in production containers. On the network side, least privilege applies: only explicitly permitted inbound and outbound connections, backed by firewall rules and L7 logs.
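
The container-level settings above can be sketched as a small helper that assembles a `docker run` command. This is only an illustration: the function name, the default UID/GID 10001 and the image reference are assumptions, while the flags themselves (`--user`, `--cap-drop`, `--security-opt no-new-privileges`, `--read-only`, `--tmpfs`) are standard Docker CLI options.

```python
from typing import List


def hardened_run_args(image: str, uid: int = 10001, gid: int = 10001) -> List[str]:
    """Build a `docker run` argument list with restrictive defaults.

    The helper name and the default IDs are illustrative assumptions.
    """
    if uid == 0:
        raise ValueError("refusing to build a command that runs as root")
    return [
        "docker", "run", "--rm",
        "--user", f"{uid}:{gid}",               # never start as root
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation via setuid
        "--read-only",                          # read-only root filesystem
        "--tmpfs", "/tmp",                      # writable scratch space in memory only
        image,
    ]


if __name__ == "__main__":
    # Hypothetical image reference with a pinned tag.
    print(" ".join(hardened_run_args("registry.example.com/app:1.4.2")))
```

Individual capabilities can be added back with `--cap-add` where a workload demonstrably needs them; the sketch deliberately starts from zero.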

Kubernetes hardening: RBAC, namespaces, policies

In Kubernetes, I define roles granularly with RBAC and review them periodically through audits. Namespaces separate workloads, tenants and sensitivity levels. NetworkPolicies take a default-deny approach and only open what a service really needs. Per pod, I set SecurityContext options such as runAsNonRoot, disallow privilege escalation and drop capabilities such as NET_RAW. Admission controls with OPA Gatekeeper prevent faulty deployments from entering the cluster.
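
As a rough sketch, the default-deny policy and the container SecurityContext described above could be generated like this. The manifests are built as plain Python dictionaries and printed as JSON, which `kubectl apply -f` accepts. The function names, the policy name `default-deny-all`, the namespace `tenant-a` and the UID are assumptions for illustration.

```python
import json
from typing import Any, Dict


def default_deny_policy(namespace: str) -> Dict[str, Any]:
    """NetworkPolicy that selects every pod and allows no ingress or egress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector matches all pods in the namespace
            "policyTypes": ["Ingress", "Egress"],  # no rules listed, so nothing is allowed
        },
    }


def restricted_security_context(uid: int = 10001) -> Dict[str, Any]:
    """Container-level securityContext with the options named in the text."""
    return {
        "runAsNonRoot": True,
        "runAsUser": uid,
        "allowPrivilegeEscalation": False,
        "capabilities": {"drop": ["NET_RAW"]},
    }


if __name__ == "__main__":
    print(json.dumps(default_deny_policy("tenant-a"), indent=2))
    print(json.dumps(restricted_security_context(), indent=2))
```

Each service then needs its own additional NetworkPolicy that opens only the connections it really requires.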

CI/CD pipeline: Scan, sign, block

I integrate vulnerability scans for container images into the pipeline and block builds with critical findings. Image signing provides integrity and traceability back to the source. Policy-as-code enforces minimum standards, such as no :latest tags, no privileged pods and defined user IDs. The registry itself also needs protection: private repositories, immutable tags and access only for authorized users and service accounts. In this way, the supply chain stops faulty artifacts before they reach the cluster.
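
The three minimum standards named above can be expressed as a small pipeline check. The following is a simplified sketch, not a replacement for a real policy engine: it inspects a pod spec given as a dictionary, and the function names and message texts are assumptions.

```python
from typing import Any, Dict, List


def image_tag_is_pinned(image: str) -> bool:
    """True if the image uses a digest or an explicit tag other than 'latest'."""
    if "@sha256:" in image:
        return True
    last_segment = image.rsplit("/", 1)[-1]  # ignore a registry port such as host:5000
    if ":" not in last_segment:
        return False  # no tag means an implicit :latest
    return last_segment.rsplit(":", 1)[1] != "latest"


def policy_violations(pod_spec: Dict[str, Any]) -> List[str]:
    """Return human-readable violations; an empty list means the spec passes."""
    violations: List[str] = []
    pod_ctx = pod_spec.get("securityContext") or {}
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext") or {}
        if not image_tag_is_pinned(container.get("image", "")):
            violations.append(f"{name}: image tag is missing or :latest")
        if ctx.get("privileged") is True:
            violations.append(f"{name}: privileged container")
        # The container setting wins; otherwise fall back to the pod-level value.
        if ctx.get("runAsUser", pod_ctx.get("runAsUser")) is None:
            violations.append(f"{name}: no explicit runAsUser")
    return violations
```

A pipeline step would fail the build whenever the returned list is non-empty.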

Network segmentation and east-west protection

I limit lateral movement by setting hard boundaries in the cluster network. Microsegmentation at namespace and app level reduces the scope of an intrusion. I document ingress and egress controls as code and version every change. I describe service-to-service communication in detail, monitor anomalies and block suspicious behavior immediately. TLS in the pod network and stable service identities tighten protection further.

Monitoring, logging and rapid response

I collect metrics, logs and events in real time and rely on anomaly detection instead of just static threshold values. Signals from API servers, kubelet, CNI, ingress and workloads flow into a central SIEM. eBPF-based sensors detect suspicious syscalls, file accesses or container escapes. I have runbooks ready for incidents: isolate, preserve forensic evidence, rotate, restore. Without rehearsed playbooks, good tools fall flat in an emergency.

Secrets, compliance and backups

I store secrets in encrypted form, rotate them regularly and limit their lifetime. I implement KMS/HSM-backed procedures and ensure clear responsibilities. I back up data stores regularly and test the restore under realistic conditions. I seal Kubernetes objects, CRDs and storage snapshots against manipulation. Anyone who uses Docker hosting should clarify contractually how key material, backup cycles and restore times are handled so that audit and operations fit together.

Frequent misconfigurations and direct countermeasures

Containers running as root, missing readOnlyRootFilesystem flags and open host paths are classics. I consistently remove privileged pods and do not use hostNetwork or hostPID. I treat exposed Docker sockets as a critical gap and eliminate them. I replace default-allow networks with clear policies that define and check communication. Admission controls block risky manifests before they run.
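
To make these classics checkable, a minimal sketch could scan a pod spec for host-level exposure. The function name and the wording of the findings are assumptions; the inspected fields (`hostNetwork`, `hostPID`, `hostPath`, `readOnlyRootFilesystem`) are regular Kubernetes pod spec fields.

```python
from typing import Any, Dict, List


def host_exposure_findings(pod_spec: Dict[str, Any]) -> List[str]:
    """List settings that expose the host or leave the root filesystem writable."""
    findings: List[str] = []
    for flag in ("hostNetwork", "hostPID"):
        if pod_spec.get(flag) is True:
            findings.append(f"{flag} is enabled")
    for volume in pod_spec.get("volumes", []):
        host_path = (volume.get("hostPath") or {}).get("path")
        if host_path is None:
            continue  # not a hostPath volume
        if host_path.endswith("docker.sock"):
            findings.append(f"volume {volume.get('name')}: Docker socket mounted")
        else:
            findings.append(f"volume {volume.get('name')}: hostPath {host_path}")
    for container in pod_spec.get("containers", []):
        ctx = container.get("securityContext") or {}
        if ctx.get("readOnlyRootFilesystem") is not True:
            findings.append(f"container {container.get('name')}: root filesystem is writable")
    return findings
```

The same rules would normally live in the admission layer as well, so that a manifest that slips past the pipeline is still rejected at the cluster boundary.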

Practical hardening of the Docker daemon

I deactivate unused remote APIs, require client certificates and place a firewall in front of the engine. The daemon runs with AppArmor/SELinux profiles, and auditd records security-relevant actions. I separate namespaces and cgroups cleanly to enforce resource control. I write logs to centralized backends and keep an eye on rotation. Host hardening remains mandatory: kernel updates, a minimal package set and no unnecessary services.

Provider selection: Security, managed services and comparison

I rate providers according to technical depth, transparency and auditability. This includes certifications, hardening guidelines, response times and recovery tests. Managed platforms should offer admission policies, provide image scanning and deliver clear RBAC templates. If you are still unsure, an orchestration comparison offers helpful orientation on control planes and operating models. The following overview shows providers with a clear security focus:

| Rank | Provider | Features |
|---|---|---|
| 1 | webhoster.de | Managed Docker & Kubernetes, security audit, ISO 27001, GDPR |
| 2 | Hostserver.net | ISO-certified, GDPR, container monitoring |
| 3 | DigitalOcean | Global cloud network, simple scaling, low entry-level prices |

Operational reliability through policies and tests

Without regular checks, every security concept ages. I roll out benchmarks and policies automatically and link them to compliance checks. Chaos and GameDay exercises realistically test isolation, alarms and playbooks. KPIs such as Mean Time to Detect and Mean Time to Recover guide my improvements. I derive measures from deviations and anchor them firmly in the process.
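
The two KPIs can be computed from incident timestamps with a few lines of code. This sketch assumes that Mean Time to Detect is measured from the start of an incident to its detection and Mean Time to Recover from detection to recovery; other definitions exist, and the `Incident` record is a hypothetical structure.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Incident:
    started: datetime    # when the problem began
    detected: datetime   # when monitoring or a person noticed it
    recovered: datetime  # when normal service was restored


def mean_delta(deltas: List[timedelta]) -> timedelta:
    """Arithmetic mean of a non-empty list of durations."""
    if not deltas:
        raise ValueError("at least one incident is required")
    return sum(deltas, timedelta()) / len(deltas)


def mean_time_to_detect(incidents: List[Incident]) -> timedelta:
    return mean_delta([i.detected - i.started for i in incidents])


def mean_time_to_recover(incidents: List[Incident]) -> timedelta:
    # Assumption: recovery time is counted from detection, not from start.
    return mean_delta([i.recovered - i.detected for i in incidents])
```

Tracked per quarter, the two values show whether exercises and policy changes actually shorten detection and recovery.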

Node and host hardening: the first line of defense

Secure containers start with secure hosts. I minimize the base OS (no compilers, no debug tools), enable LSMs like AppArmor/SELinux and use cgroups v2 consistently. The kernel remains up-to-date, I deactivate unnecessary modules and I choose hypervisor or MicroVM isolation for particularly sensitive workloads. I secure the kubelet with a deactivated read-only port, client certificates, restrictive flags and a tight firewall environment. Swap remains off, time sources are signed, and NTP drift is monitored, because timestamps are critical for forensics and audits.

PodSecurity and profiles: Making standards binding

I make security the default setting: I enforce PodSecurity standards cluster-wide and tighten them per namespace. Seccomp profiles reduce syscalls to what is necessary, AppArmor profiles restrict file access. I combine readOnlyRootFilesystem with tmpfs for write requirements and set fsGroup, runAsUser and runAsGroup explicitly. HostPath mounts are taboo or strictly limited to read-only, dedicated paths. I drop capabilities completely by default and only rarely add specific ones back. This results in reproducible, minimally privileged workloads.
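
One way to make these defaults binding per namespace is the set of Pod Security Admission labels. The sketch below builds a Namespace manifest with those labels and a pod-level securityContext with explicit IDs and the runtime default seccomp profile. The function names, the namespace name and the IDs are assumptions; the label keys and the level names come from Kubernetes itself.

```python
from typing import Any, Dict

POD_SECURITY_LEVELS = ("privileged", "baseline", "restricted")


def namespace_with_pod_security(name: str, level: str = "restricted") -> Dict[str, Any]:
    """Namespace manifest that enforces, audits and warns at one level."""
    if level not in POD_SECURITY_LEVELS:
        raise ValueError(f"unknown Pod Security level: {level}")
    prefix = "pod-security.kubernetes.io"
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {
                f"{prefix}/enforce": level,  # reject violating pods
                f"{prefix}/audit": level,    # record violations in the audit log
                f"{prefix}/warn": level,     # show warnings to the deploying user
            },
        },
    }


def pod_security_context(uid: int = 10001, gid: int = 10001) -> Dict[str, Any]:
    """Pod-level securityContext with explicit IDs and a seccomp profile."""
    return {
        "runAsNonRoot": True,
        "runAsUser": uid,
        "runAsGroup": gid,
        "fsGroup": gid,
        "seccompProfile": {"type": "RuntimeDefault"},
    }
```

A stricter custom seccomp profile can replace `RuntimeDefault` once the syscalls a workload needs are known.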

Deepening the supply chain: SBOM, provenance and signatures

Scans alone are not enough. I create an SBOM for each build, check it against policies (prohibited licenses, risky components) and record provenance data. In addition to the image, signatures also cover metadata and build provenance. Admission controls only allow signed, policy-compliant artifacts and reject :latest and other mutable tags. In air-gapped environments, I replicate the registry, sign offline and synchronize in a controlled manner, so integrity remains verifiable even without a constant Internet connection.
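
The license check against an SBOM can be illustrated with a deliberately simplified component list. Real SBOM formats such as CycloneDX or SPDX are richer; the flat `name`/`version`/`license` structure, the function name and the decision to flag components without license information are all assumptions of this sketch.

```python
from typing import Dict, List, Set


def denied_components(components: List[Dict[str, str]], denied_licenses: Set[str]) -> List[str]:
    """Return components whose license is on the deny list or unknown."""
    hits: List[str] = []
    for component in components:
        license_id = component.get("license", "UNKNOWN")
        # Assumption: a missing license is treated as a finding, not as a pass.
        if license_id in denied_licenses or license_id == "UNKNOWN":
            hits.append(f"{component.get('name')}@{component.get('version')} ({license_id})")
    return hits


if __name__ == "__main__":
    # Hypothetical SBOM excerpt for illustration only.
    sbom = [
        {"name": "libfoo", "version": "1.2.0", "license": "MIT"},
        {"name": "libbar", "version": "0.9.1", "license": "AGPL-3.0-only"},
        {"name": "libbaz", "version": "2.0.0"},
    ]
    for hit in denied_components(sbom, {"AGPL-3.0-only"}):
        print("policy violation:", hit)
```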

Tenant separation and resource protection

True multi-tenancy requires more than namespaces. I work with ResourceQuotas, LimitRanges and pod priorities to prevent "noisy neighbors". I separate storage classes according to sensitivity and isolate snapshots per tenant. The four-eyes principle applies to admin access, and sensitive namespaces receive dedicated service accounts and analyzable audit trails. I also tighten egress rules for build and test namespaces and consistently prevent access to production data.
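
A per-tenant quota and default container limits might look like the following sketch. The object names and every numeric value are placeholders chosen for illustration, not recommendations; the resource kinds and field names follow the Kubernetes core API.

```python
from typing import Any, Dict


def tenant_quota(namespace: str) -> Dict[str, Any]:
    """ResourceQuota capping the total resources of one tenant namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "tenant-quota", "namespace": namespace},
        "spec": {
            "hard": {  # placeholder values
                "requests.cpu": "4",
                "requests.memory": "8Gi",
                "limits.cpu": "8",
                "limits.memory": "16Gi",
                "pods": "20",
            }
        },
    }


def tenant_limit_range(namespace: str) -> Dict[str, Any]:
    """LimitRange that gives containers defaults when they declare none."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "tenant-defaults", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    "default": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        },
    }
```

The quota bounds the namespace as a whole, while the LimitRange makes sure that no single container runs without requests and limits.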

Securing the data path: stateful, snapshots, ransomware resistance

I secure stateful workloads with end-to-end encryption: in transit with TLS, at rest in the volume using provider or CSI encryption, with keys managed via KMS. I mark snapshots as tamper-proof, adhere to retention policies and test restore paths including app consistency. For ransomware resistance, I rely on immutable copies and separate backup domains. Access to backup repositories uses separate identities and strict least privilege so that a compromised pod cannot delete any history.

Service identities and zero trust in the cluster

I anchor identity in the infrastructure, not in IPs. Service identities receive short-lived certificates, mTLS protects service-to-service traffic, and L7 policies only allow defined methods and paths. The linchpin is a clear AuthN/AuthZ model: who talks to whom, for what purpose and for how long. I automate certificate rotation and keep secrets outside the images. This creates a resilient zero-trust pattern that remains stable even with IP changes and autoscaling.

Defuse DoS and resource attacks

I set hard requests/limits, limit PIDs, file descriptors and bandwidth, and I monitor ephemeral storage. Buffers in front of the ingress (rate limits, timeouts) prevent individual clients from blocking the cluster. Backoff strategies, circuit breakers and budget limits in deployments keep errors local. Ingress controllers and API gateways are given separate, scalable nodes, so the control plane remains protected when public load peaks occur.
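
Rate limiting in front of the ingress is often implemented as a token bucket. The text does not prescribe a specific algorithm, so the following is one possible sketch under that assumption; the class name and parameters are illustrative, and the clock is injectable so the behavior can be tested deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = capacity  # start with a full bucket
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens if available; otherwise reject the request."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill for the elapsed time, but never beyond the capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

In practice there would be one bucket per client or tenant, so that a single noisy client exhausts only its own budget.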

Concrete detection and response

Runbooks are operational. I isolate compromised pods with network policies, mark nodes as unschedulable (cordon/drain), forensically secure artifacts (container file systems, memory, relevant logs) and keep the chain of evidence complete. I automatically rotate secrets, revoke tokens and restart workloads in a controlled manner. After the incident, a review flows back into policies, tests and dashboards - security is a learning cycle, not a one-off action.

Governance, record keeping and compliance

Only what can be proven counts as secure. I collect evidence automatically: policy reports, signature checks, scan results, RBAC diffs and compliant deployments. Changes are made via pull requests, with reviews and a clean change log. I link confidentiality, integrity and availability to measurable controls that stand up in audits. I separate operations and security as far as possible (segregation of duties) without losing speed: clear roles, clear responsibilities, clear transparency.

Team enablement and "Secure by default"

I provide "Golden Paths": tested base images, deployment templates with SecurityContext, ready-made NetworkPolicy building blocks and pipeline templates. Developers receive quick feedback loops (pre-commit checks, build scans), security champions in teams help with questions. Threat modeling before the first commit saves expensive fixes later on. The aim is for the secure approach to be the fastest - guardrails instead of gatekeeping.

Performance, costs and stability at a glance

Hardening must match the platform. I measure the overheads of eBPF sensors, signature checks and admission controls and optimize them. Minimal images accelerate deployments, reduce the attack surface and save transfer costs. Registry garbage collection, build cache strategies and clear tagging rules keep the supply chain lean. Security thus remains an efficiency factor rather than a brake.

Conclusion: Security as daily practice

Container security succeeds when I set clear standards, automate them and check them continuously. I start with cleanly hardened images, strict policies and tangible segmentation. Then I keep an eye on runtime signals, train incident response and test recoveries. In this way, attack surfaces shrink and failures remain limited. If you take a systematic approach, you noticeably reduce risks and protect customer data as well as your own reputation.