Quantum hosting promises a new era in which I accelerate and secure web services with quantum resources. The question is whether this is a myth or soon a reality for the hosting of tomorrow. I show where quantum hosting stands today, what hurdles remain and how hybrid models open up the transition to productive data centers.

Key points

The following aspects will give you a quick overview of the opportunities and limitations of Quantum Hosting.

- Performance boost: Qubits enable enormous acceleration in optimization, AI and analysis.

- Security: Post-quantum processes and quantum communication strengthen confidentiality.

- Hybrid: Classic servers and quantum nodes work together in a complementary way.

- Maturity level: Pilot projects are convincing, widespread use still needs time.

- Competition: Early preparation creates a noticeable lead in the market.

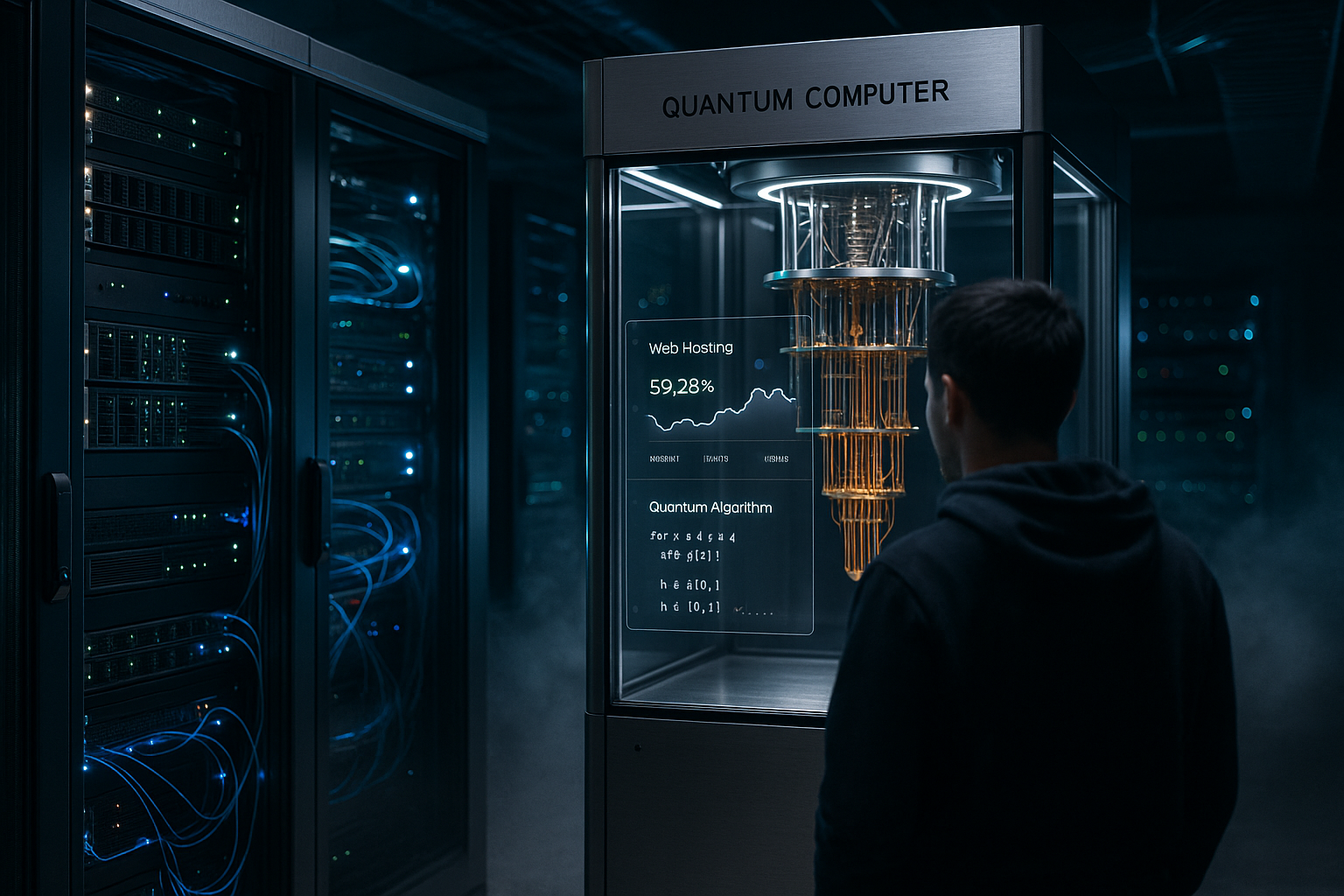

What is behind Quantum Hosting?

What I refer to as Quantum Hosting is the skillful coupling of classic infrastructure with quantum resources in order to solve special computing tasks much faster. These include optimization, AI training, data analysis and cryptographic routines that benefit from superposition and entanglement. As the International Year of Quantum Science and Technology, 2025 marks an increased global focus on deployable solutions [2][7]. While conventional servers continue to deliver standard services, I access quantum accelerators for suitable workloads. In this way, I make targeted use of the strengths of both worlds and increase the benefit for productive scenarios.

Architecture and orchestration in detail

In practice, I build an orchestration layer that distributes workloads according to clear rules. A decision module checks parameters such as latency budget, problem size, data locality and expected accuracy. If the job fits, it is translated into a quantum format, mapped to the respective hardware topology and scheduled in a noise-aware manner. Not every algorithm is "quantum-ready", so I use hybrid patterns such as variational quantum algorithms or quantum-inspired heuristics that handle pre- and post-processing classically. The fallback level is important: if the quantum path does not meet its SLOs, the classic solver runs automatically so that the user experience remains stable.
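The decision module and the fallback level can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a finished orchestrator: the names `Job`, `QuantumBackend`, `should_use_quantum` and `run_with_fallback` are hypothetical, and the solvers are plain callables that return a solution together with the accuracy they achieved.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class Job:
    problem_size: int            # e.g. number of decision variables
    latency_budget_ms: float     # end-to-end budget derived from the SLO
    data_may_leave_domain: bool  # data-locality rule for this workload
    min_accuracy: float          # required solution quality, 0..1


@dataclass(frozen=True)
class QuantumBackend:
    max_problem_size: int
    expected_latency_ms: float   # queue + transpilation + execution
    expected_accuracy: float


# Assumption: a solver returns (solution, achieved_accuracy).
Solver = Callable[[Job], Tuple[str, float]]


def should_use_quantum(job: Job, backend: QuantumBackend) -> bool:
    """Rule-based check: every criterion must pass for the quantum path."""
    return (
        job.data_may_leave_domain
        and job.problem_size <= backend.max_problem_size
        and backend.expected_latency_ms <= job.latency_budget_ms
        and backend.expected_accuracy >= job.min_accuracy
    )


def run_with_fallback(
    job: Job, backend: QuantumBackend, quantum: Solver, classic: Solver
) -> Tuple[str, str]:
    """Return (path, solution); use the classic solver on any miss."""
    if should_use_quantum(job, backend):
        try:
            solution, accuracy = quantum(job)
            if accuracy >= job.min_accuracy:
                return "quantum", solution
        except Exception:
            pass  # provider error: handled like a missed SLO
    solution, _ = classic(job)
    return "classic", solution
```

The point of the design is that the classic path is always reachable: a failed rule, a provider error or an insufficient result all end in the same place.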

For repeatable deployments, I encapsulate pipelines in containers, separate data paths from control flows and expose interfaces as APIs. A telemetry layer provides me with live calibration data, maintenance windows and queue lengths from the quantum providers. This allows me to make data-driven scheduling decisions and maintain SLAs even when hardware quality fluctuates.
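A data-driven scheduling decision of this kind might look like the following sketch. The `BackendTelemetry` fields, the `pick_backend` helper and the error threshold of 0.02 are assumptions for illustration; real providers expose their calibration and queue data in their own formats.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class BackendTelemetry:
    name: str
    queue_length: int        # jobs waiting ahead of ours
    avg_job_seconds: float   # recent average runtime per job
    two_qubit_error: float   # from the latest calibration snapshot
    in_maintenance: bool


def pick_backend(
    snapshots: Sequence[BackendTelemetry], max_error: float = 0.02
) -> Optional[BackendTelemetry]:
    """Pick the healthy backend with the shortest estimated wait.

    Returns None when no backend qualifies, so the caller can
    route the job to the classic path instead.
    """
    healthy = [
        s for s in snapshots
        if not s.in_maintenance and s.two_qubit_error <= max_error
    ]
    if not healthy:
        return None
    return min(healthy, key=lambda s: s.queue_length * s.avg_job_seconds)
```

Returning `None` rather than raising keeps the scheduler simple: "no suitable quantum backend" is a normal outcome, not an error.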

Technical basics: qubits, superposition and entanglement

Qubits are the central unit of a quantum computer and, thanks to superposition, can exist in a combination of several states at once. Algorithms exploit this property together with interference, which speeds up certain tasks considerably, in some cases exponentially compared with the best known classical methods. Entanglement couples qubits in such a way that measuring one state immediately allows conclusions to be drawn about the other, which I use for optimized algorithms. Error correction remains a major challenge at present, as noise and decoherence disturb the sensitive states. This is precisely where hardware and software innovations aim to reduce the error rate and pave the way for data centers.
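Superposition and entanglement can be made concrete with a tiny state-vector simulation in plain Python. The sketch below is an idealized, noise-free toy (the function names are my own): it applies a Hadamard gate to the first qubit and then a CNOT, which produces a Bell state in which only the outcomes 00 and 11 occur, each with probability 0.5.

```python
import math

# Amplitudes for the basis states |00>, |01>, |10>, |11> (index = 2*q0 + q1).
state = [1.0, 0.0, 0.0, 0.0]


def hadamard_on_q0(amps):
    """Put qubit 0 into an equal superposition of 0 and 1."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = amps
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_q0_controls_q1(amps):
    """Flip qubit 1 whenever qubit 0 is 1: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = amps
    return [a00, a01, a11, a10]


bell = cnot_q0_controls_q1(hadamard_on_q0(state))

# Measurement probabilities are the squared magnitudes of the amplitudes.
probabilities = [abs(a) ** 2 for a in bell]
for label, p in zip(["00", "01", "10", "11"], probabilities):
    print(f"P({label}) = {p:.2f}")
```

Because 01 and 10 never appear, reading one qubit tells you the value of the other. That correlation is what the text means by drawing conclusions from one state about the other.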

Potential for web hosting workloads

I use quantum resources where classical systems reach their limits: complex optimizations, AI model training, pattern recognition in large data streams and sophisticated cryptography. Research conducted by companies such as D-Wave and IBM shows that quantum processes can noticeably accelerate selected real-world tasks [3][4]. This opens up new services for hosting providers, such as extremely fast personalization, dynamic price calculation or predictive scaling. Scheduling in data centers can also be improved in this way, as jobs and resources can be allocated more intelligently. The benefit: noticeably shorter response times, better capacity utilization and increased efficiency during peak loads.

Developer ecosystem and skills

To make this happen, I qualify teams in two steps. First, I provide a solid grounding in quantum algorithms, noise and complexity. Then I focus on toolchains that work end to end, from notebook experiments through CI/CD to productive pipelines. I rely on abstraction layers that encapsulate the target hardware and on modular libraries so that I can keep workloads portable. Code quality, tests with simulated error channels and reproducible environments are mandatory. The result is a day-to-day developer routine that takes the special features of qubits into account without operations coming to a standstill.

Rethinking security with post-quantum methods

Future quantum computers could attack today's crypto methods, so I base my security strategies on post-quantum algorithms early on. These include key agreements and signatures that remain viable even in a quantum era. In addition, the focus is on quantum communication, which allows key exchange with physically verifiable eavesdropping detection. If you want to delve deeper, you will find a compact introduction to quantum cryptography in hosting. This is how I strengthen confidentiality, integrity and availability: prepared today so that I do not risk gaps tomorrow.

Compliance, data sovereignty and governance

I classify data consistently and define what information a quantum provider is allowed to see. Sensitive user data remains in my domain; only compressed problem representations or anonymized features are sent to external nodes. Audit trails document when which job ran with which key material. A crypto inventory lists all the cryptographic methods in use and gives me the basis for a gradual PQC changeover. For regulated industries, I plan for data localization and export controls and anchor these requirements contractually. In this way, compliance remains part of the architecture and not just a late addition.
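A crypto inventory can start as a simple list that is checked against known quantum-vulnerable schemes. The sketch below is illustrative only: the systems in `inventory` are invented examples and `migration_backlog` is a hypothetical helper. The classification itself rests on well-known facts: RSA, Diffie-Hellman and elliptic-curve schemes are breakable by Shor's algorithm on a sufficiently large quantum computer, while ML-KEM, ML-DSA and SLH-DSA are the post-quantum schemes standardized by NIST.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Public-key schemes that Shor's algorithm would break.
QUANTUM_VULNERABLE = {"RSA", "DH", "ECDH", "ECDSA"}
# NIST-standardized post-quantum schemes (FIPS 203, 204, 205).
POST_QUANTUM = {"ML-KEM", "ML-DSA", "SLH-DSA"}


@dataclass(frozen=True)
class CryptoUse:
    system: str     # where the algorithm is deployed
    purpose: str    # e.g. "key exchange", "signature"
    algorithm: str


def migration_backlog(inventory: Iterable[CryptoUse]) -> List[CryptoUse]:
    """Return all entries that still rely on quantum-vulnerable schemes."""
    vulnerable = [u for u in inventory if u.algorithm in QUANTUM_VULNERABLE]
    return sorted(vulnerable, key=lambda u: u.system)


# Hypothetical example entries, not taken from a real environment.
inventory = [
    CryptoUse("edge-tls", "key exchange", "ECDH"),
    CryptoUse("backup-archive", "signature", "SLH-DSA"),
    CryptoUse("customer-portal", "signature", "RSA"),
    CryptoUse("internal-mesh", "key exchange", "ML-KEM"),
]

for use in migration_backlog(inventory):
    print(f"{use.system}: migrate {use.algorithm} ({use.purpose})")
```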

Myth vs. reality: where do we stand today?

Many things sound impressive, but I soberly distinguish hype from tangible maturity. Productive quantum servers require extremely low temperatures, highly stable shielding and reliable error correction. These requirements are demanding and make operation more expensive. At the same time, pilot projects are already delivering tangible results, for example in material simulation, route planning or crypto testing [1][2][5][6]. I therefore evaluate each task critically and decide when quantum resources really add value and when classic systems will continue to dominate.

Benchmarking and performance measurement

I measure not only raw speed but also end-to-end effects: queue time, transpilation effort, network latency and result quality. A clean, classic baseline comparison is mandatory, including optimized solvers and identical data paths. Only when p95 latency, costs per request and accuracy are better than the status quo is a use case considered suitable. I scale tests step by step (small instances, medium, then near-production sizes) in order to avoid overfitting to mini-problems. I ensure comparability with standardized data sets, defined seed values and reproducible builds.
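The acceptance rule described here (p95 latency, cost per request and accuracy all better than the baseline) can be expressed as a small gate. This is a sketch under assumptions: `percentile` uses the nearest-rank method, which is one of several common definitions, and `RunStats` and `beats_baseline` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # integer ceil(pct * n / 100)
    return ordered[max(rank, 1) - 1]


@dataclass(frozen=True)
class RunStats:
    latencies_ms: Sequence[float]  # end-to-end, including queue time
    cost_per_request: float
    accuracy: float


def beats_baseline(candidate: RunStats, baseline: RunStats) -> bool:
    """A use case qualifies only if all three metrics improve."""
    return (
        percentile(candidate.latencies_ms, 95) < percentile(baseline.latencies_ms, 95)
        and candidate.cost_per_request < baseline.cost_per_request
        and candidate.accuracy > baseline.accuracy
    )
```

Measuring `latencies_ms` end to end matters: a quantum run that is fast on the chip but spends minutes in a provider queue should fail this gate.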

Hybrid data centers: a bridge to practice

I see the near future in hybrid architectures, in which classic servers deliver standard services and quantum accelerators take on special tasks. APIs and orchestration layers distribute workloads to where they run fastest and most efficiently. The first platforms already allow quantum jobs via cloud access, which lowers the barrier to entry [3][4][5]. If you want to understand the technical paths, you can find practical background information on quantum computing in web hosting. This is how I gradually feed quantum resources into productive pipelines without disrupting operations.

Operation, reliability and observability

Quantum hardware characteristics shift with every regular calibration; I incorporate these signals into my monitoring. Dashboards show me error rates per gate, available qubit topologies, queues and current SLO fulfillment. Canary runs check new versions under low load before I scale up. If the quality drops, a policy takes effect: deactivating certain backends, switching to alternatives or reverting to purely classic paths. Chaos tests simulate failures so that regular operations remain robust and tickets do not escalate in the middle of peak loads.

Concrete application scenarios in everyday hosting life

I use quantum acceleration for routing optimization in content delivery networks so that content is closer to the user and latency decreases. For AI-supported security, I analyze anomalies faster and detect attack vectors earlier. Key rotation and certificate verification gain speed, which simplifies operating large-scale infrastructures. In e-commerce, I also merge recommendations in real time when quantum algorithms nimbly explore large search spaces. This combination of speed and better decision quality improves user experience and capacity utilization.

Practical playbook: use-case deep dive and KPIs

For CDNs, I formulate caching and path selection as a combinatorial problem: variational methods quickly provide good candidates, which I refine classically. I measure p95/p99 latencies, cache hit rates and costs per delivered gigabyte. In e-commerce workflows, I check shopping cart size, conversion rate and time to first byte under load. I compare security applications using precision/recall, mean time to detect and false positives. A/B and dark launch strategies ensure that I can statistically prove improvements before I roll them out globally.
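For the A/B step, one common way to check whether a conversion-rate difference is statistically meaningful is a two-proportion z-test. The sketch below uses only the standard library; the function name and the example figures are illustrative assumptions, and other tests (or sequential methods) may suit a given rollout better.

```python
import math
from typing import Tuple


def two_proportion_z_test(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> Tuple[float, float]:
    """Compare conversion rates of control (a) and variant (b).

    Returns (z, two_sided_p_value) using the pooled standard error.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, nothing to detect
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value of the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical figures: classic path (a) vs. quantum-assisted path (b).
z, p = two_proportion_z_test(conv_a=1000, n_a=10000, conv_b=1200, n_b=10000)
print(f"z = {z:.2f}, significant at 5%: {p < 0.05}")
```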

Performance, costs, maturity: realistic roadmap

I plan in phases and evaluate goals, risks and benefits for each stage. Phase one uses cloud access to quantum resources for tests and PoCs. Phase two couples orchestration with SLA criteria so that quantum jobs are introduced in a controlled manner. Phase three integrates selected workloads permanently as soon as stability and cost-effectiveness are right. This is how I keep investments controllable and deliver measurable results for the company.

Cost models, ROI and procurement

I calculate total cost of ownership across the entire chain: developer time, orchestration, data transfer, cloud fees per shot/job and potential priority surcharges. Reserved capacity reduces queues, but increases fixed costs - useful for critical paths, oversized for exploration work. I evaluate ROI based on real business metrics: revenue growth, cost reduction per request, energy consumption and avoided hardware investments. To avoid vendor lock-in, I rely on portable descriptions, clear exit clauses and multi-source strategies. On the contractual side, I ensure service levels, data deletion and traceability in writing.
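The cost chain above translates directly into a small calculation. The following sketch is a simplified monthly model with invented example figures; the field names and the `roi` helper are assumptions, and a real model would add items such as energy or reserved-capacity fees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlyCosts:
    developer_hours: float
    hourly_rate: float
    orchestration: float       # platform and tooling
    data_transfer: float
    jobs: int                  # quantum jobs submitted
    fee_per_job: float         # cloud fee per job (or per batch of shots)
    priority_surcharge: float

    def total(self) -> float:
        """Total cost of ownership for the month."""
        return (
            self.developer_hours * self.hourly_rate
            + self.orchestration
            + self.data_transfer
            + self.jobs * self.fee_per_job
            + self.priority_surcharge
        )

    def per_request(self, requests: int) -> float:
        return self.total() / requests


def roi(monthly_benefit: float, monthly_cost: float) -> float:
    """Return on investment as a fraction: 0.25 means 25 percent."""
    return (monthly_benefit - monthly_cost) / monthly_cost


# Hypothetical example figures.
costs = MonthlyCosts(
    developer_hours=80, hourly_rate=100.0, orchestration=1500.0,
    data_transfer=300.0, jobs=400, fee_per_job=2.5, priority_surcharge=200.0,
)
print(f"TCO: {costs.total():.2f}, per request: {costs.per_request(1_000_000):.4f}")
print(f"ROI: {roi(13750.0, costs.total()):.0%}")
```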

Market and providers: Who is preparing?

Many providers are observing the development, but I recognize clear differences in the speed and depth of preparation. Early testing, partnerships with research institutions and team training are paying off. Providers who open up pilot paths now will give themselves a head start at go-live. This also includes the ability to identify and prioritize customer scenarios appropriately. The following table shows an exemplary classification of the innovation focus and the level of preparation:

| Ranking | Provider | Innovation focus | Quantum Hosting preparation |
|---|---|---|---|
| 1 | webhoster.de | Very high | Already in research & planning |
| 2 | Provider B | High | First pilot projects |
| 3 | Provider C | Medium | Research partnerships |

Risks and countermeasures

In addition to technical risks (noise, availability), I address organizational and legal dimensions. I deliberately plan for hardware obsolescence so that I don't get stuck with outdated interfaces later on. A multi-vendor strategy with abstracted drivers reduces dependencies. On the security side, I test PQC implementations including key lifecycles and maintain crypto-agility. Operationally, I define a "kill switch" that immediately deactivates quantum paths in the event of anomalies and switches the service to classic systems. Transparent internal and external communication ensures trust if, contrary to expectations, benchmarks show no advantage.
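A kill switch of this kind is essentially a latch: once tripped, it stays tripped until someone deliberately resets it. The sketch below shows the idea with a hypothetical `QuantumKillSwitch` class. The anomaly threshold is an assumption; the default of 1 matches the "immediately" in the text, while a higher value would tolerate isolated blips.

```python
class QuantumKillSwitch:
    """Latch that routes all traffic to classic systems once tripped."""

    def __init__(self, max_consecutive_anomalies: int = 1) -> None:
        if max_consecutive_anomalies < 1:
            raise ValueError("threshold must be at least 1")
        self._threshold = max_consecutive_anomalies
        self._streak = 0
        self.tripped = False

    def record(self, anomaly: bool) -> None:
        """Feed one monitoring observation into the switch."""
        if self.tripped:
            return  # stays off until an explicit reset
        self._streak = self._streak + 1 if anomaly else 0
        if self._streak >= self._threshold:
            self.tripped = True

    def trip(self) -> None:
        """Manual override for operators."""
        self.tripped = True

    def reset(self) -> None:
        """Re-enable quantum paths after the incident is resolved."""
        self._streak = 0
        self.tripped = False

    def route(self) -> str:
        return "classic" if self.tripped else "quantum"
```

Requiring an explicit `reset()` is a deliberate choice: quantum paths should come back only after someone has looked at the cause, not because the anomalies happened to pause.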

Quantum communication and the coming quantum internet

Quantum communication complements the hosting stack with physics-based security, for example through key exchange in which eavesdropping can be detected. This lays the foundation for reliable data channels between data centers and edge locations. In the long term, the quantum internet will connect nodes with new protocols that add protection mechanisms to traditional networks. If you would like to read more about this, start with this overview of the Quantum Internet. This creates a safety net that goes beyond pure software and relies on physics.

Summary: From buzzword to workbench

Quantum Hosting is gradually leaving theory behind and moving into the first productive paths. I combine classic servers for standard tasks with quantum resources for special cases that deserve real acceleration. I think ahead in terms of security with post-quantum processes so that I don't come under pressure later. If you test early, you define standards, train teams and save time during the transition. This is how a vision becomes a toolbox that brings together performance, security and efficiency.