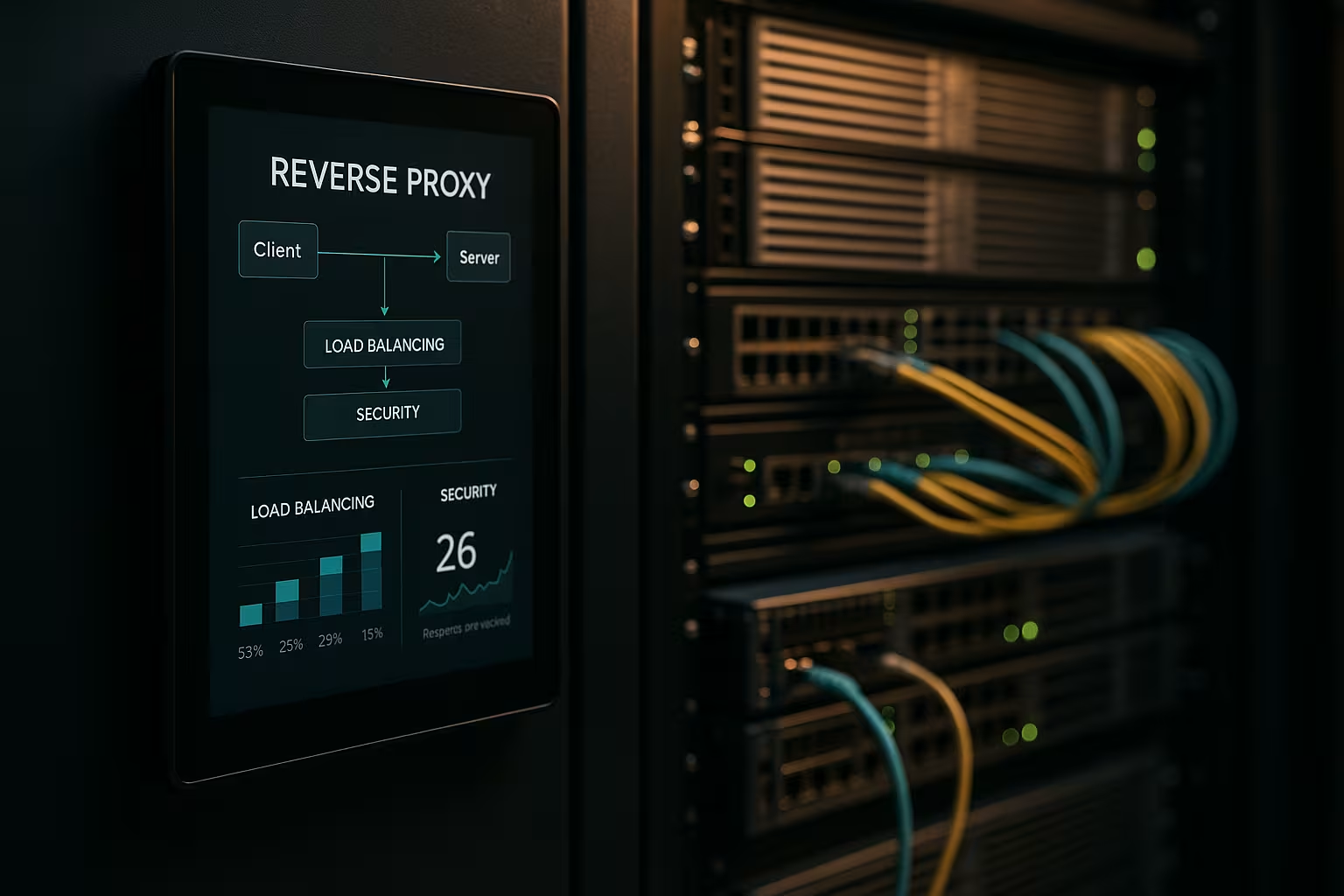

A reverse proxy architecture accelerates requests, protects backend systems and scales web applications without modifying the app servers. I show how a reverse proxy measurably improves performance, security and scaling in day-to-day operations.

Key points

- Performance through caching, SSL offloading and HTTP/2/3

- Security via WAF, DDoS protection and IP/geo-blocking

- Scaling with load balancing and health checks

- Control thanks to centralized routing, logging and analysis

- Practice with NGINX, Apache, HAProxy, Traefik

What does a reverse proxy architecture do?

I place the reverse proxy in front of the application servers and have it terminate all incoming connections. This encapsulates the internal structure, keeps backend IPs hidden and minimizes direct attack surfaces. The proxy decides which service handles each request, and it can cache content. It takes care of TLS, compression and protocol optimizations such as HTTP/2 and HTTP/3. This noticeably reduces the load on the app servers and gives me a single place for policies, analytics and quick changes.

Performance optimization: caching, offloading, edge

I combine caching, SSL offloading and edge delivery to reduce latency. I serve common assets such as images, CSS and JS from the cache, while dynamic parts stay fresh (e.g. through fragment caching). I use Cache-Control directives such as stale-while-revalidate and stale-if-error to shorten waiting times and keep delivering content during disruptions. TLS 1.3, Early Hints (HTTP 103) as a replacement for HTTP/2 server push, and Brotli compression provide additional acceleration. For international users, the proxy routes to nearby nodes, which reduces the time to first byte. A look at the advantages and typical application scenarios shows which adjustments pay off first.
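
The stale-while-revalidate and stale-if-error directives are defined in RFC 5861. As a minimal sketch (plain Python, not any proxy's actual code), the decision a cache makes for a stored response might look like this; the `CachedEntry` type and the returned status strings are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


def parse_cache_control(value: str) -> dict[str, int]:
    """Extract numeric Cache-Control directives such as 'max-age=60'."""
    directives: dict[str, int] = {}
    for part in value.split(","):
        name, _, arg = part.strip().partition("=")
        if arg.isdigit():
            directives[name.lower()] = int(arg)
    return directives


@dataclass
class CachedEntry:
    stored_at: float                 # when the response entered the cache (seconds)
    max_age: int                     # freshness lifetime
    stale_while_revalidate: int = 0  # extra window: serve stale, refresh in background
    stale_if_error: int = 0          # extra window: serve stale if the origin fails


def cache_decision(entry: CachedEntry, now: float, origin_healthy: bool = True) -> str:
    age = now - entry.stored_at
    if age <= entry.max_age:
        return "HIT"                 # still fresh
    staleness = age - entry.max_age
    if staleness <= entry.stale_while_revalidate:
        return "STALE_REVALIDATING"  # answer immediately, revalidate asynchronously
    if not origin_healthy and staleness <= entry.stale_if_error:
        return "STALE_IF_ERROR"      # origin failing: fall back to the stale copy
    return "MISS"                    # must fetch from the origin


cc = parse_cache_control("public, max-age=60, stale-while-revalidate=30, stale-if-error=600")
entry = CachedEntry(stored_at=0, max_age=cc["max-age"],
                    stale_while_revalidate=cc["stale-while-revalidate"],
                    stale_if_error=cc["stale-if-error"])
print(cache_decision(entry, now=80))  # STALE_REVALIDATING (20 s past max-age)
```

The two windows are independent: stale-while-revalidate applies even when the origin is healthy, while stale-if-error only kicks in once the origin fails.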

Improving the security situation: WAF, DDoS, geo-blocking

I analyze traffic at the proxy and filter malicious requests before they reach backend systems. A WAF recognizes patterns such as SQL injection or XSS and blocks them centrally. TLS termination makes the otherwise encrypted traffic available for inspection, after which I forward it cleanly to the backend. DDoS defense relies on the proxy, which distributes, limits or blocks requests without touching the applications. Geo and IP blocking cuts off known sources, while rate limits and bot detection curb abuse.
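
Rate limiting at the proxy is commonly built on a token bucket. The sketch below is a simplified, in-memory illustration keyed by client IP; real proxies ship their own rate-limiting modules and often share state across nodes, which this example does not. The class name and its parameters are hypothetical.

```python
from __future__ import annotations


class RateLimiter:
    """Token bucket per client key: `rate` requests/second, bursts up to `burst`."""

    def __init__(self, rate: float, burst: int) -> None:
        self.rate = rate
        self.burst = burst
        self.buckets: dict[str, tuple[float, float]] = {}  # key -> (tokens, last update)

    def allow(self, client: str, now: float) -> bool:
        tokens, last = self.buckets.get(client, (float(self.burst), now))
        # Refill proportionally to elapsed time, never beyond the burst size
        tokens = min(float(self.burst), tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self.buckets[client] = (tokens - 1.0, now)
            return True
        self.buckets[client] = (tokens, now)
        return False  # the proxy would answer 429 Too Many Requests


limiter = RateLimiter(rate=1.0, burst=3)
print([limiter.allow("198.51.100.7", now=0.0) for _ in range(4)])  # [True, True, True, False]
```

The burst size absorbs short spikes from legitimate clients, while the refill rate caps sustained throughput per key.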

Scaling and high availability with load balancing

I distribute load using load-balancing algorithms such as round robin, least connections or weighted rules. When sessions must remain bound to one node, I use sticky sessions via cookie affinity. Health checks actively probe services so that the proxy automatically removes faulty targets from the pool. Horizontal scaling takes minutes: register new nodes, reload the configuration, done. For tool selection, a short comparison of load-balancing tools with a focus on L7 functions helps.
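
To make the balancing strategies concrete, here is a minimal sketch of smooth weighted round robin (the variant popularized by NGINX) and weighted least connections, both restricted to backends whose health check currently passes. The `Backend` type and its fields are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    weight: int = 1
    healthy: bool = True   # maintained by active health checks
    active: int = 0        # open connections, used by least connections
    current: int = 0       # running score for smooth weighted round robin


def healthy_pool(pool: list[Backend]) -> list[Backend]:
    candidates = [b for b in pool if b.healthy]
    if not candidates:
        raise RuntimeError("no healthy backend available")
    return candidates


def pick_weighted_round_robin(pool: list[Backend]) -> Backend:
    candidates = healthy_pool(pool)
    total = sum(b.weight for b in candidates)
    for b in candidates:
        b.current += b.weight          # every backend earns its weight...
    best = max(candidates, key=lambda b: b.current)
    best.current -= total              # ...and the winner pays back the total
    return best


def pick_least_connections(pool: list[Backend]) -> Backend:
    # Fewest open connections relative to weight wins
    return min(healthy_pool(pool), key=lambda b: b.active / b.weight)


pool = [Backend("a", weight=5), Backend("b"), Backend("c")]
print("".join(pick_weighted_round_robin(pool).name for _ in range(7)))  # aabacaa
```

The smooth variant interleaves the lighter backends instead of sending five requests in a row to "a", which avoids short load bursts on a single node.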

Central management and precise monitoring

I collect logs centrally at the gateway and measure key metrics such as response times, throughput, error rates and TTFB. Dashboards show hotspots, slow endpoints and traffic peaks. Header analysis (e.g. cache status and Age) helps to fine-tune caching strategies. Correlation IDs let me track requests across services and speed up root cause analysis. I define uniform policies for HSTS, CSP, CORS and TLS profiles once at the proxy instead of separately in each service.
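
A minimal sketch of correlation-ID handling, assuming the widely used but non-standard `X-Request-ID` header: the proxy reuses a well-formed incoming ID (e.g. one set by a CDN), otherwise generates one, forwards it upstream and writes it into a key=value access log line. The function names and log format are hypothetical; NGINX, for instance, exposes a generated ID as the `$request_id` variable.

```python
from __future__ import annotations

import re
import uuid

REQUEST_ID_HEADER = "X-Request-ID"  # common convention, not a standard; adjust to your stack
_VALID_ID = re.compile(r"[A-Za-z0-9._-]{8,128}")


def with_request_id(headers: dict[str, str]) -> tuple[dict[str, str], str]:
    """Reuse a well-formed incoming ID or mint a new one, and forward it upstream."""
    name = REQUEST_ID_HEADER.lower()
    incoming = next((v for k, v in headers.items() if k.lower() == name), None)
    # Reject malformed IDs so clients cannot inject arbitrary text into logs
    request_id = incoming if incoming and _VALID_ID.fullmatch(incoming) else uuid.uuid4().hex
    upstream = {k: v for k, v in headers.items() if k.lower() != name}
    upstream[REQUEST_ID_HEADER] = request_id
    return upstream, request_id


def access_log_line(request_id: str, method: str, path: str, status: int, duration_ms: float) -> str:
    return (f'request_id={request_id} method={method} path="{path}" '
            f"status={status} duration_ms={duration_ms:.1f}")


upstream, rid = with_request_id({"Host": "shop.example"})
print(access_log_line(rid, "GET", "/cart", 200, 12.34))
```

Because every service logs the same ID, a single search across all logs reconstructs the path of one request.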

Routes, rules and releases without risk

I control routing based on host name, path, headers, cookies or geo-information. This lets me route APIs and frontends separately, even if they share the same ports. I implement blue-green and canary releases directly on the proxy by directing small user groups to new versions. Feature-flag headers help with controlled tests under real traffic. I keep maintenance windows short because I can switch routes in seconds.
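
Host/path routing with a sticky canary split can be sketched as follows. This is an illustrative model, not any proxy's configuration language: the `Route` type, the `X-Canary` header and the hash-based bucketing are assumptions.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass
class Route:
    host: str
    path_prefix: str
    backend: str
    canary_backend: str | None = None
    canary_percent: int = 0  # share of users (0-100) sent to the canary


def prefix_matches(path: str, prefix: str) -> bool:
    # "/api" matches "/api" and "/api/orders" but not "/apiary"
    return path == prefix or path.startswith(prefix.rstrip("/") + "/")


def user_bucket(user_id: str) -> int:
    """Stable bucket 0-99, so a user keeps seeing the same version."""
    digest = hashlib.sha256(user_id.encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def select_backend(routes: list[Route], host: str, path: str,
                   headers: dict[str, str], user_id: str) -> str | None:
    matches = [r for r in routes if r.host == host and prefix_matches(path, r.path_prefix)]
    if not matches:
        return None                                         # would become a 404
    route = max(matches, key=lambda r: len(r.path_prefix))  # most specific prefix wins
    if route.canary_backend:
        if headers.get("X-Canary") == "1":                  # hypothetical feature-flag header
            return route.canary_backend
        if user_bucket(user_id) < route.canary_percent:
            return route.canary_backend
    return route.backend


routes = [
    Route("shop.example", "/", "frontend"),
    Route("shop.example", "/api", "api-v1", canary_backend="api-v2", canary_percent=10),
]
print(select_backend(routes, "shop.example", "/api/orders", {"X-Canary": "1"}, "u-42"))  # api-v2
```

Hashing a stable user ID instead of rolling a die per request keeps each user on one version, so a canary does not flip between old and new behavior mid-session.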

Technology comparison in practice

I choose the tool that matches the load, protocols and operating objectives. NGINX excels at static content, TLS, HTTP/2/3 and efficient reverse proxy functions. Apache shines in environments with .htaccess, extensive modules and legacy stacks. HAProxy provides very strong L4/L7 balancing and fine-grained control over health checks. Traefik integrates well into containerized setups and reads routes dynamically from labels.

| Solution | Strengths | Typical applications | Special features |
|---|---|---|---|
| NGINX | High performance, HTTP/2/3, TLS | Web front-ends, APIs, static delivery | Brotli, caching, TLS offloading, stream module |
| Apache | Modular flexibility, .htaccess | Legacy stacks, PHP-heavy installations | Many modules, fine-grained access handling |
| HAProxy | Efficient balancing, health checks | L4/L7 load balancer, gateway | Very granular, sophisticated ACLs |
| Traefik | Dynamic discovery, container focus | Kubernetes, Docker, microservices | Auto-configuration, Let's Encrypt integration |

Implementation steps and checklist

I start with goals: I prioritize performance, security, availability and budget. I then specify certificates, cipher suites and protocol versions. I define routing rules, caching policies and limits clearly and keep them under version control. I set up health checks, observability and alerts before going live. If you want to get started right away, you will find step-by-step instructions in the guide "Set up reverse proxy for Apache and NGINX".

Best practices for performance tuning

I enable HTTP/3 over QUIC where clients support it and keep HTTP/2 available for broad compatibility. I use Brotli for text resources and let the proxy compress images efficiently. I define cache keys deliberately to control which cookies or headers create variants. I minimize TLS handshake times, use session resumption and enable OCSP stapling. I use Early Hints (103) to give the browser advance signals about critical resources.
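
A minimal sketch of deliberate cache-key construction, assuming an example policy: ignore tracking parameters, sort the query string, collapse Accept-Encoding into a few variants, and include only allowlisted cookies. The constants and function names are hypothetical; in NGINX the corresponding setting is `proxy_cache_key`, in Varnish the `vcl_hash` subroutine.

```python
from __future__ import annotations

from urllib.parse import parse_qsl, urlencode, urlsplit

# Example policy (assumptions): tune per application
VARY_HEADERS = ("accept-encoding",)
COOKIE_ALLOWLIST = ("lang",)  # only cookies that change the rendered page
IGNORED_PARAMS = ("utm_source", "utm_medium", "utm_campaign")


def normalize_encoding(value: str) -> str:
    # Collapse countless Accept-Encoding variants into a few cache variants
    tokens = {t.split(";")[0].strip() for t in value.lower().split(",")}
    for enc in ("br", "gzip"):
        if enc in tokens:
            return enc
    return "identity"


def cache_key(method: str, url: str, headers: dict[str, str]) -> str:
    parts = urlsplit(url)
    query = sorted((k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
                   if k not in IGNORED_PARAMS)
    lower = {k.lower(): v for k, v in headers.items()}
    varied = []
    for name in VARY_HEADERS:
        value = lower.get(name, "")
        if name == "accept-encoding":
            value = normalize_encoding(value)
        varied.append(f"{name}={value.strip()}")
    cookies = {}
    for pair in lower.get("cookie", "").split(";"):
        cname, sep, cvalue = pair.strip().partition("=")
        if sep:
            cookies[cname] = cvalue
    kept = [f"cookie:{n}={cookies[n]}" for n in COOKIE_ALLOWLIST if n in cookies]
    return "|".join([method.upper(), parts.netloc.lower(), parts.path, urlencode(query), *varied, *kept])


print(cache_key("GET", "https://Shop.example/p?b=2&a=1&utm_source=x",
                {"Accept-Encoding": "gzip, deflate, br", "Cookie": "session=abc; lang=de"}))
```

Dropping the session cookie from the key is what lets many users share one cached copy; leaving it in would effectively disable the cache.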

Security setup without friction

I manage certificates centrally and automate renewals with ACME. HSTS enforces HTTPS, while CSP and CORP control which content may be loaded. I start a WAF rule set conservatively and tighten it gradually to avoid false positives. Rate limits, mTLS for internal services and IP lists reduce risk day to day. Audit logs stay tamper-proof so that I can trace incidents in a legally sound way.
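
Setting these headers once at the proxy can be sketched as a response filter. The policy values below are example assumptions (a strict CSP such as `default-src 'self'` can break pages that load third-party assets), and this design lets an application's own header win; a stricter setup could overwrite instead.

```python
from __future__ import annotations

# Example policy values (assumptions): tune per application before rollout
DEFAULT_SECURITY_HEADERS = {
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    "Content-Security-Policy": "default-src 'self'",
    "Cross-Origin-Resource-Policy": "same-origin",
    "X-Content-Type-Options": "nosniff",
}


def apply_security_headers(headers: dict[str, str], is_https: bool) -> dict[str, str]:
    """Add missing security headers to a response; headers set by the app are kept."""
    present = {k.lower() for k in headers}
    out = dict(headers)
    for name, value in DEFAULT_SECURITY_HEADERS.items():
        if name.lower() in present:
            continue  # the application sent its own policy
        if name == "Strict-Transport-Security" and not is_https:
            continue  # RFC 6797: browsers ignore HSTS received over plain HTTP
        out[name] = value
    return out


print(apply_security_headers({"Content-Type": "text/html"}, is_https=True))
```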

Costs, operation and ROI

I plan the budget for server resources, certificates, DDoS protection and monitoring. Small setups often start with a few virtual cores and 4-8 GB of RAM for the proxy, which costs in the low double-digit euro range per month, depending on the provider. Larger fleets use dedicated instances, anycast and global nodes, which can mean three-digit euro costs per location. Central administration saves time: fewer individual configurations, faster release processes and shorter downtimes. The ROI shows up in higher conversion, lower bounce rates and more productive engineering.

Architecture variants and topologies

I choose the architecture to match the risk and latency profile. Simple environments work well with a single gateway in the DMZ that forwards requests to internal services. In regulated or large environments, I split frontend and backend proxies into two stages: stage 1 terminates Internet traffic and handles WAF, DDoS protection and caching; stage 2 routes internally, speaks mTLS and enforces zero-trust principles. Active/active setups with anycast IPs and globally distributed nodes reduce failover times and bring the service closer to users. When a CDN sits in front of the reverse proxy, I make sure headers are forwarded correctly (e.g. X-Forwarded-Proto, X-Real-IP) and that cache hierarchies are coordinated so the edge and gateway caches do not work against each other. I isolate multi-tenant scenarios using SNI-based TLS, separate routes and isolated rate limits to avoid noisy-neighbor effects.
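
In a two-stage setup, the edge proxy should discard forwarding headers sent by clients and start a fresh chain, while the inner proxy extends the chain it receives from the trusted stage 1. A minimal sketch of that logic follows; the function name and the trust flag are assumptions, and real proxies express the same thing in configuration (e.g. `proxy_set_header` in NGINX).

```python
from __future__ import annotations

# RFC 7239 "Forwarded" is dropped too; this sketch only re-adds the X-* variants
FORWARDING_HEADERS = {"x-forwarded-for", "x-forwarded-proto", "x-forwarded-host", "x-real-ip", "forwarded"}


def build_upstream_headers(incoming: dict[str, str], peer_ip: str, scheme: str,
                           host: str, peer_is_trusted_proxy: bool) -> dict[str, str]:
    lower = {k.lower(): v for k, v in incoming.items()}
    # Drop every forwarding header first; re-add only what we can vouch for
    out = {k: v for k, v in incoming.items() if k.lower() not in FORWARDING_HEADERS}
    if peer_is_trusted_proxy and "x-forwarded-for" in lower:
        # Inner hop: keep the chain built by the trusted stage and append the peer
        out["X-Forwarded-For"] = f"{lower['x-forwarded-for']}, {peer_ip}"
        out["X-Forwarded-Proto"] = lower.get("x-forwarded-proto", scheme)
        out["X-Forwarded-Host"] = lower.get("x-forwarded-host", host)
        out["X-Real-IP"] = lower.get("x-real-ip", peer_ip)
    else:
        # Edge hop: whatever the client claimed is untrusted, start a fresh chain
        out["X-Forwarded-For"] = peer_ip
        out["X-Forwarded-Proto"] = scheme
        out["X-Forwarded-Host"] = host
        out["X-Real-IP"] = peer_ip
    return out


edge = build_upstream_headers({"Host": "shop.example", "X-Forwarded-For": "6.6.6.6"},
                              "203.0.113.7", "https", "shop.example", peer_is_trusted_proxy=False)
inner = build_upstream_headers(edge, "10.0.0.5", "http", "shop.example", peer_is_trusted_proxy=True)
print(inner["X-Forwarded-For"], inner["X-Forwarded-Proto"])  # 203.0.113.7, 10.0.0.5 https
```

Keeping the original X-Forwarded-Proto from stage 1 is what prevents the backend from issuing HTTP-to-HTTPS redirects for requests that were already HTTPS at the edge.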

Protocols and special cases: WebSockets, gRPC and HTTP/3

I account for protocols with special requirements so that features remain stable. For WebSockets, I enable upgrade support and long-lived connections with suitable timeouts. gRPC benefits from HTTP/2 and clean headers; I avoid h2c (plaintext HTTP/2) at the perimeter in favor of TLS with correct ALPN negotiation. For HTTP/3, I open the QUIC port (UDP) and enable 0-RTT only restrictively, because early data can be replayed. Streaming endpoints, server-sent events and large uploads get their own buffering and body-size policies so that the proxy does not become a bottleneck. For protocol translation (e.g. HTTP/2 outside, HTTP/1.1 inside), I thoroughly test header normalization, compression and connection reuse to keep latency low and resource consumption predictable.
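
RFC 8470 describes how servers and intermediaries handle TLS 1.3 early data: forwarded 0-RTT requests carry an `Early-Data: 1` header, and a server can answer `425 Too Early` so the client retries after the handshake completes. A minimal policy sketch, assuming a hypothetical allowlist of path prefixes that are safe to serve from early data:

```python
from __future__ import annotations

SAFE_METHODS = {"GET", "HEAD", "OPTIONS"}
EARLY_DATA_PREFIXES = ("/static/", "/assets/")  # hypothetical: replay-safe content only


def early_data_policy(method: str, path: str, in_early_data: bool) -> tuple[int | None, dict[str, str]]:
    """Return (status, extra_headers); status None means 'forward to the backend'."""
    if not in_early_data:
        return None, {}                   # normal request after the full handshake
    if method.upper() in SAFE_METHODS and path.startswith(EARLY_DATA_PREFIXES):
        return None, {"Early-Data": "1"}  # forward, but tell the backend it arrived as 0-RTT
    return 425, {}                        # 425 Too Early: client retries after the handshake


print(early_data_policy("POST", "/checkout", in_early_data=True))  # (425, {})
```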

Authentication and authorization at the gateway

I move authentication and authorization decisions to the reverse proxy when the architecture and compliance requirements allow it. I integrate OIDC/OAuth2 via token verification at the gateway: the proxy validates signatures (against the JWKS), checks expiry, audience and scopes, and passes verified claims to the services as headers. I use API keys for machine-to-machine endpoints and restrict them per route. For internal systems, I rely on mTLS with mutual certificate verification to make trust explicit. I avoid logging sensitive headers (Authorization, Cookie) unnecessarily and use allow/deny lists per route. I express fine-grained policies via ACLs or expressions (e.g. path + method + claim), which lets me control access centrally without changing application code.
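
The token checks can be sketched with the standard library alone. To stay self-contained, this example verifies HS256 (HMAC) signatures; a gateway validating OIDC tokens against a JWKS endpoint would typically check RS256 or ES256 signatures with a cryptography library instead. The function names and the `X-Auth-*` header names are hypothetical, and the proxy must strip any client-supplied copies of those headers before forwarding.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import json
import time


class TokenError(Exception):
    pass


def _b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def make_token(claims: dict, secret: bytes) -> str:
    """Test helper: issue an HS256 token."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(sig)}"


def verify_token(token: str, secret: bytes, audience: str, scope: str,
                 now: float | None = None) -> dict[str, str]:
    """Validate signature, expiry, audience and scope; return headers for the upstream."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        signature = _b64url_decode(sig_b64)
        claims = json.loads(_b64url_decode(payload_b64))  # untrusted until the signature checks out
    except ValueError as exc:
        raise TokenError("malformed token") from exc
    if header.get("alg") != "HS256":
        raise TokenError("unexpected algorithm")  # never accept 'none' or a swapped alg
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):
        raise TokenError("bad signature")
    if claims.get("exp", 0) <= (time.time() if now is None else now):
        raise TokenError("token expired")
    aud = claims.get("aud")
    if audience not in (aud if isinstance(aud, list) else [aud]):
        raise TokenError("wrong audience")
    if scope not in str(claims.get("scope", "")).split():
        raise TokenError("missing scope")
    return {"X-Auth-Subject": str(claims.get("sub", "")), "X-Auth-Scopes": str(claims["scope"])}


token = make_token({"sub": "alice", "aud": "shop-api", "scope": "orders:read",
                    "exp": time.time() + 300}, b"demo-secret")
print(verify_token(token, b"demo-secret", audience="shop-api", scope="orders:read"))
```

On any `TokenError`, the gateway would answer 401 or 403 itself, so the backend only ever sees requests with verified claims.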

Resilience: timeouts, retries, backoff and circuit breaking

I define timeouts deliberately per hop: connection establishment, header timeout and response timeout. I only enable retries for idempotent methods and combine them with exponential backoff plus jitter to avoid thundering herds. Circuit breakers protect backend pools: if the proxy detects error or latency spikes, it temporarily opens the circuit, lets only occasional probe requests through to the affected destination (half-open state) and otherwise responds early, optionally with a fallback from the cache. Outlier detection automatically removes "weak" instances from the pool. I also limit concurrent upstream connections, enable connection reuse and use queues with fair prioritization. This keeps services stable even when individual components come under pressure.
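
Both mechanisms fit in a few lines. The sketch below shows capped exponential backoff with "full jitter" (each delay drawn uniformly between zero and the capped exponential value) and a simple consecutive-failure circuit breaker with a single half-open probe. Thresholds, names and the idempotent-method set are assumptions; production proxies (e.g. Envoy's outlier detection) offer richer policies.

```python
from __future__ import annotations

import random
from typing import Callable

IDEMPOTENT = {"GET", "HEAD", "OPTIONS", "PUT", "DELETE"}  # subset of RFC 9110's idempotent methods


def retry_delays(method: str, attempts: int = 3, base: float = 0.1, cap: float = 2.0,
                 rng: Callable[[], float] = random.random) -> list[float]:
    """Sleep times before each retry; empty for non-idempotent methods."""
    if method.upper() not in IDEMPOTENT:
        return []  # never retry e.g. POST blindly
    return [rng() * min(cap, base * 2 ** i) for i in range(attempts)]


class CircuitBreaker:
    """Closed -> open after `threshold` consecutive failures; one probe after `cooldown` s."""

    def __init__(self, threshold: int = 5, cooldown: float = 30.0) -> None:
        self.threshold = threshold
        self.cooldown = cooldown
        self.failures = 0
        self.opened_at: float | None = None
        self.probe_in_flight = False

    def allow(self, now: float) -> bool:
        if self.opened_at is None:
            return True                  # closed: forward normally
        if now - self.opened_at < self.cooldown:
            return False                 # open: fail fast or serve a cached fallback
        if not self.probe_in_flight:
            self.probe_in_flight = True  # half-open: let a single probe through
            return True
        return False

    def record(self, success: bool, now: float) -> None:
        if success:
            self.failures, self.opened_at, self.probe_in_flight = 0, None, False
            return
        self.failures += 1
        if self.opened_at is not None or self.failures >= self.threshold:
            self.opened_at, self.probe_in_flight = now, False  # (re)open the circuit


print(retry_delays("GET", attempts=4))  # four random delays, each capped at 2.0 s
```

The jitter spreads retries from many clients over time, so a recovering backend is not hit by a synchronized wave of requests.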

Compliance, data protection and PII protection

I treat the proxy as a data hub with clear data protection rules. I mask or pseudonymize personal data in logs; query strings and sensitive headers are only logged on an allowlist basis. I truncate IP addresses where possible and adhere to strict retention periods. Access to logs and metrics is role-based, and changes are documented in an audit-proof manner. For audits, I link gateway events with change management entries so that approvals and rule updates can be traced. This lets me meet compliance requirements without sacrificing deep insight into performance and security.
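
A minimal sketch of log sanitizing at the proxy, assuming a hypothetical policy: IPv4 addresses truncated to /24 and IPv6 to /48, query parameters replaced by a placeholder unless allowlisted, and only allowlisted headers logged at all. The allowlists are example assumptions, and the prefix lengths are a common choice, not a legal requirement.

```python
from __future__ import annotations

import ipaddress
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

LOGGABLE_PARAMS = {"page", "sort", "lang"}                   # hypothetical allowlist
LOGGABLE_HEADERS = {"user-agent", "accept", "content-type"}  # hypothetical allowlist


def anonymize_ip(ip: str) -> str:
    """Zero the host part: keep /24 for IPv4 and /48 for IPv6."""
    prefix = 24 if ipaddress.ip_address(ip).version == 4 else 48
    return str(ipaddress.ip_network(f"{ip}/{prefix}", strict=False).network_address)


def redact_url(url: str) -> str:
    parts = urlsplit(url)
    params = [(k, v if k in LOGGABLE_PARAMS else "REDACTED")
              for k, v in parse_qsl(parts.query, keep_blank_values=True)]
    return urlunsplit(parts._replace(query=urlencode(params)))


def loggable_headers(headers: dict[str, str]) -> dict[str, str]:
    # Authorization, Cookie and anything unknown never reach the log
    return {k: v for k, v in headers.items() if k.lower() in LOGGABLE_HEADERS}


print(anonymize_ip("203.0.113.77"), redact_url("/search?q=alice%40example.com&page=2"))
# 203.0.113.0 /search?q=REDACTED&page=2
```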

Kubernetes, Ingress and Gateway API

I integrate the reverse proxy seamlessly into container orchestration. In Kubernetes, I use ingress controllers or the newer Gateway API to describe routing, TLS and policies declaratively. Traefik discovers routes dynamically from labels or custom resources, while NGINX and HAProxy offer mature ingress controllers for high throughput. I separate cluster-internal east/west routing (service mesh) from the north/south perimeter gateway so that responsibilities remain clear. I implement canary releases with weighted routes or header matches, and I define health checks and pod readiness strictly to avoid flapping. I version configurations as code and test them in staging clusters with load simulation before putting them into production.
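
Flapping is usually tamed with hysteresis: a target is only marked down after several consecutive failed checks and only back up after several consecutive successes. HAProxy calls these thresholds `fall` and `rise`; Kubernetes probes call them `failureThreshold` and `successThreshold`. A minimal sketch of the state machine, with hypothetical names:

```python
from __future__ import annotations


class HealthState:
    """Health-check hysteresis: `fall` failures to go down, `rise` successes to come back."""

    def __init__(self, rise: int = 2, fall: int = 3) -> None:
        self.rise = rise
        self.fall = fall
        self.healthy = True
        self.streak = 0  # consecutive results that contradict the current state

    def observe(self, ok: bool) -> bool:
        if ok == self.healthy:
            self.streak = 0  # result confirms the current state
        else:
            self.streak += 1
            needed = self.fall if self.healthy else self.rise
            if self.streak >= needed:
                self.healthy, self.streak = ok, 0  # flip only after a sustained trend
        return self.healthy


state = HealthState(rise=2, fall=3)
print([state.observe(ok) for ok in [False, True, False, False, False, True, True]])
# [True, True, True, True, False, False, True]
```

A single failed check (the first `False`) does not remove the target; only the run of three does.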

Operational maturity: configuration management and CI/CD

I treat the proxy configuration as code. Changes go through pull requests, are tested automatically (syntax, linting, security checks) and rolled out through pipelines. I use preview environments or shadow traffic to validate new routes under real conditions without risking customer transactions. Rollbacks take seconds because I tag versions and deploy them atomically. I manage secrets (certificates, keys) separately, encrypted and with minimal permissions. For high availability, I roll out releases to nodes in stages and track the effects in dashboards so that I can react quickly to regressions.
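
A pipeline check can be as simple as a script that loads the proxy's route definitions and fails the build on obvious mistakes. The schema below (`upstreams`, `routes`, `timeout_s`) is a hypothetical, tool-neutral format; in practice you would also run the proxy's own syntax check, such as `nginx -t` or `haproxy -c -f <file>`.

```python
from __future__ import annotations


def lint_config(config: dict) -> list[str]:
    """Return human-readable problems; an empty list means the config passes."""
    errors: list[str] = []
    upstreams = set(config.get("upstreams", {}))
    seen: set[tuple[str, str]] = set()
    for i, route in enumerate(config.get("routes", [])):
        key = (route.get("host", ""), route.get("path", "/"))
        if key in seen:
            errors.append(f"routes[{i}]: duplicate route {key[0]}{key[1]}")
        seen.add(key)
        if route.get("upstream") not in upstreams:
            errors.append(f"routes[{i}]: unknown upstream {route.get('upstream')!r}")
        timeout = route.get("timeout_s", 30)
        if not 0 < timeout <= 300:
            errors.append(f"routes[{i}]: timeout_s {timeout} outside (0, 300]")
    return errors


config = {
    "upstreams": {"api": ["10.0.0.11:8080", "10.0.0.12:8080"]},
    "routes": [
        {"host": "shop.example", "path": "/api", "upstream": "api"},
        {"host": "shop.example", "path": "/api", "upstream": "api"},
        {"host": "shop.example", "path": "/admin", "upstream": "admin", "timeout_s": 0},
    ],
}
for problem in lint_config(config):
    print(problem)
```

In CI, a step would run `lint_config` on the parsed file and exit with a non-zero status whenever the returned list is not empty.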

Typical stumbling blocks and anti-patterns

I avoid sources of error that frequently occur in practice. I prevent cache poisoning with strict header normalization and clean Vary management; I exclude cookies that do not affect rendering from the cache key. I detect redirect loops early by testing with X-Forwarded-Proto/Host and consistent HSTS/CSP policies. "Trust all X-Forwarded-For" is taboo: I only trust the next hop and set the real client IP cleanly. I handle large uploads with limits and streaming so that the proxy does not buffer data the backend can process better itself. With 0-RTT in TLS 1.3, I pay attention to idempotency. And I keep an eye on body and header sizes so that individual requests don't tie up the entire worker capacity.
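
The rule of trusting only known hops can be sketched as a right-to-left walk over X-Forwarded-For that skips the proxy's own trusted addresses and stops at the first untrusted one, similar in spirit to NGINX's realip module with `set_real_ip_from` and `real_ip_recursive on`. The trusted range below is an assumption.

```python
from __future__ import annotations

import ipaddress

TRUSTED_PROXIES = (ipaddress.ip_network("10.0.0.0/8"),)  # assumption: your proxy/CDN ranges


def client_ip(peer_ip: str, xff: str | None) -> str:
    """Derive the client address without trusting client-supplied hops."""
    def is_trusted(addr: str) -> bool:
        return any(ipaddress.ip_address(addr) in net for net in TRUSTED_PROXIES)

    if not is_trusted(peer_ip):
        return peer_ip          # direct client: ignore any X-Forwarded-For it sent
    candidate = peer_ip
    hops = [h.strip() for h in (xff or "").split(",") if h.strip()]
    for hop in reversed(hops):  # nearest hop first
        try:
            if not is_trusted(hop):
                return hop      # first untrusted hop is the real client
        except ValueError:
            break               # malformed entry: stop, keep the last trusted address
        candidate = hop
    return candidate


print(client_ip("10.0.0.2", "6.6.6.6, 198.51.100.7, 10.0.0.1"))  # 198.51.100.7
```

The spoofed leftmost entry (`6.6.6.6`) is never reached, because the walk stops at the first address not owned by a trusted proxy.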

Summary for quick decisions

I rely on a reverse proxy because it combines speed, protection and scaling in one place. Caching, TLS offloading and HTTP/2/3 significantly shorten real loading times. WAF, DDoS defense and IP/geo controls noticeably reduce risk. Load balancing, health checks and rolling releases keep services available, even during growth. With NGINX, Apache, HAProxy or Traefik, I find a suitable solution for every setup and keep operations manageable.