RAM in web hosting determines how many concurrent processes a site can sustain and how smoothly requests are handled, while CPU and I/O determine the speed of calculations and data flows. I explain how much RAM makes sense, how RAM size, CPU performance and I/O speed influence each other, and what priorities I set in practice.

Key points

Here are the most important findings up front.

- RAM size determines how many processes run in parallel.

- CPU limits calculations per second, even with a lot of RAM.

- I/O speed determines how fast data is accessed and how much caching pays off.

- Peaks are more critical than average values for sizing.

- Scaling beats oversizing in terms of costs and efficiency.

What is RAM in web hosting - briefly explained

RAM serves as fast short-term memory for the server, holding running processes, cache content and active sessions. I benefit from RAM whenever many PHP workers, database queries or caching layers are active in parallel and need fast access. Without enough memory, applications reach their limits, processes abort and the server has to swap aggressively to the slower disk. This leads to higher response times and errors during uploads, backups or image processing. With a sufficient buffer I can absorb peak loads, keep sessions in memory and enable smooth CMS workflows.

Why "free" RAM is rarely really free

Unused RAM is rarely wasted in production. Modern operating systems use free memory as a file system cache to keep frequently read files, static assets and database pages in memory. This reduces I/O accesses and stabilizes latencies. In monitoring tools, this often looks as if there is "little free", although the memory is released immediately when required. I therefore evaluate not only "free", but above all "available", i.e. the proportion that the system can release at short notice. If that proportion remains permanently low and I/O wait increases, this indicates real memory pressure and the risk of thrashing (constant swapping in and out). A healthy buffer for the file cache has a direct impact on CMS and store performance.
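The difference between "free" and "available" can be illustrated with a minimal Python sketch. It assumes a Linux system that exposes `MemTotal`, `MemFree` and `MemAvailable` in `/proc/meminfo`; the function names and the sample values are illustrative assumptions, not measurements from a real server.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into a mapping of field name -> kiB."""
    values: dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Name: value" pairs
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def memory_summary(text: str) -> dict[str, float]:
    """Return 'free' and 'available' memory as a percentage of total RAM."""
    info = parse_meminfo(text)
    total = info["MemTotal"]
    return {
        "free_pct": 100.0 * info["MemFree"] / total,
        "available_pct": 100.0 * info["MemAvailable"] / total,
    }


# Hypothetical sample: little memory is "free", but half is "available"
# because the file cache can be released on demand.
SAMPLE = """\
MemTotal:        2048000 kB
MemFree:          102400 kB
MemAvailable:    1024000 kB
Cached:           819200 kB
"""

summary = memory_summary(SAMPLE)
print(summary)
```

On a real Linux host, the same function could be fed with the contents of `/proc/meminfo` instead of the sample text. A low `free_pct` alone would not worry me; a persistently low `available_pct` would.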

Estimating RAM size: from blog to store

Larger is not automatically better, because unused RAM only costs money and has no effect. I start with a realistic size, measure load peaks and scale up instead of blindly over-provisioning. Small sites often run well with 1 GB, while a CMS with many plugins, WooCommerce stores or forums quickly require 2-4 GB or more. What matters are simultaneous users, import and image processes, the caching strategy and database workloads. Those who plan capacity carefully avoid 500 errors, timeout chains and expensive oversizing.

| Website type | Recommended RAM size |
|---|---|
| Simple static page | 64-512 MB |
| Small CMS website | 1 GB |
| Medium-sized company site | 2-4 GB |
| Elaborate webshop | 4-8 GB+ |
| Large community platform | 8 GB+ |

PHP memory limit, workers and real upper limits

PHP memory limits define the upper limit per request, not the actual consumption. A 256 MB limit does not mean that every process uses 256 MB - many stay well below this, but individual peaks can exhaust it. For PHP-FPM I calculate the number of workers from the average consumption per request: I measure real load cases (frontend, checkout, admin) and then set pm.max_children so that there is enough space for the web server, database, caches and file cache. I also limit pm.max_requests to mitigate creeping leaks. OPcache, object cache (e.g. in RAM) and database buffers require their own budgets, which I include in the overall calculation. The result: stable throughput, fewer 502/503 errors and more predictable latencies.

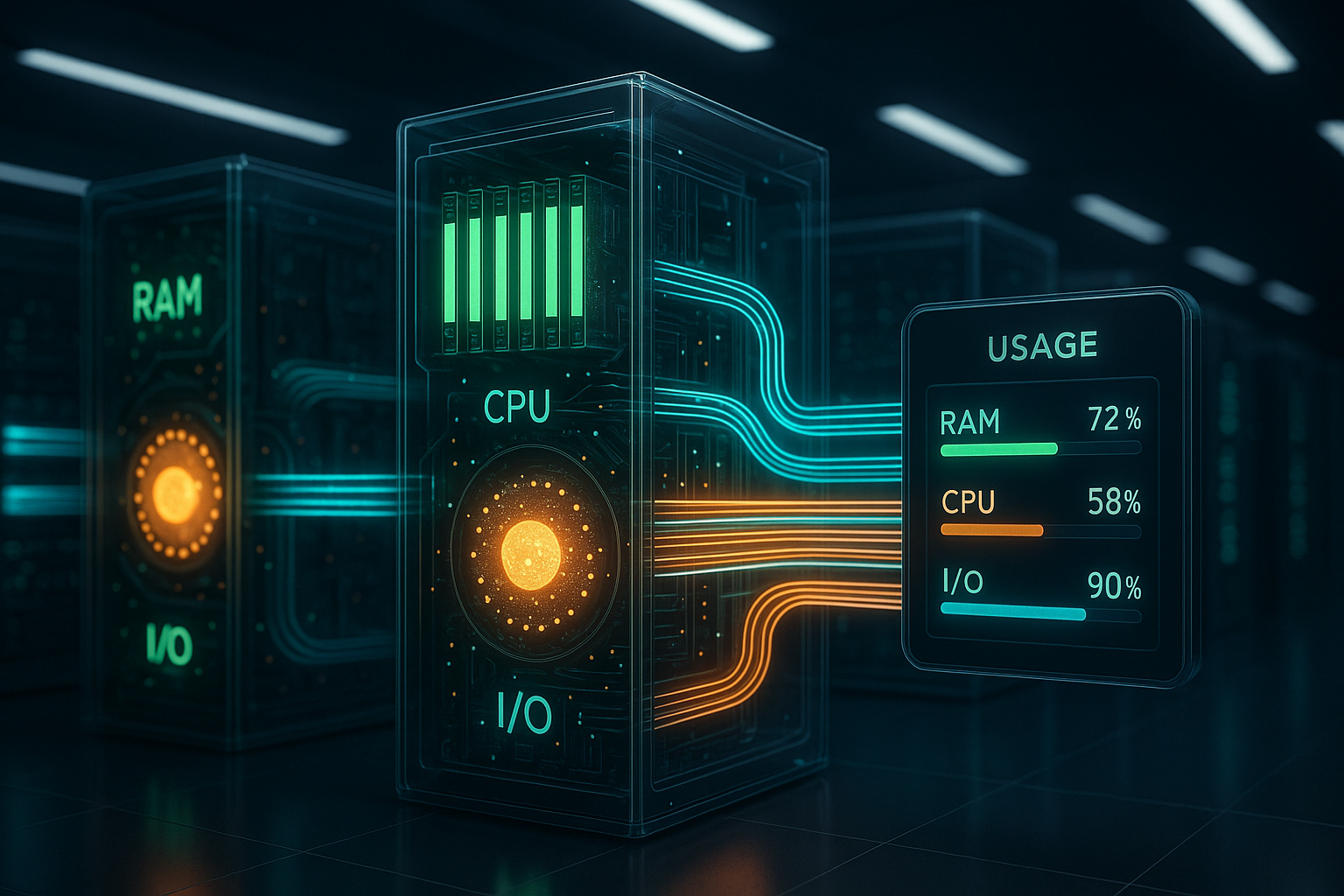

RAM vs. CPU vs. I/O: the interplay

Balance beats any single value - a lot of RAM is of little use if the CPU does not calculate fast enough or I/O slows things down. A strong CPU processes PHP requests, compression and data conversions quickly, making better use of RAM caches and databases. If the CPU is weak, requests pile up even if memory remains free. I/O speed determines how fast data flows between memory, SSD/NVMe and network; slow I/O eats up RAM advantages. I also check the CPU's thread strategy, because single-thread vs. multi-core performance influences how well my stack works in parallel.

Practical priorities in tuning

- Cache first: page cache before database, OPcache before CPU tuning, object cache before RAM increase.

- Then throughput: set the number of PHP workers to match the CPU and RAM; eliminate slow queries before scaling.

- Release I/O brakes: rotate logs, decouple image jobs, shift backup windows to low-traffic phases.

- Keep a RAM buffer for the file cache: I avoid aggressive utilization so that read accesses remain fast.

- Protect limits: sensible upload limits, timeout limits and queueing instead of excessive parallelism.

Recognize and avoid typical bottlenecks

Symptoms reveal the cause: 500 errors, empty pages or failed uploads often point to RAM or PHP memory limits. If I/O wait increases, the server is probably swapping from RAM to disk and losing time. A slow backend during image processing indicates too little RAM or I/O that is too slow. I use monitoring for RAM utilization, I/O wait, CPU load and response times to assess trends rather than snapshots. It is often enough to increase the PHP memory limit, improve caching and remove unnecessary plugins before hardware upgrades become necessary.

Monitoring in practice: what I actually measure

Close to the system, I monitor usable memory ("available"), file cache share, swap usage, I/O wait and context switches. At application level, I am interested in PHP worker utilization, queue lengths, OPcache hit rate and object cache hit rates. In the database, I check buffer sizes, the size of temporary tables and the number of simultaneous connections. Combined with response time distributions (median, P95), I can see whether a few heavy requests are outliers or whether the entire stack is buckling under load. I define warning thresholds with hysteresis (e.g. RAM above 80% for more than 10 minutes) to avoid false alarms and correlate peaks with cron jobs, imports or backups.
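A threshold with hysteresis, as described above, could look like the following Python sketch. The 80% threshold and the 10-minute hold time come from the example in the text; the lower clearing bound of 70% and the class name `SustainedAlert` are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SustainedAlert:
    """Fires only when a value stays high for a while; clears only well below."""

    threshold: float = 80.0    # percent RAM usage that counts as "high"
    clear_below: float = 70.0  # assumed lower bound that clears the alert
    hold_seconds: int = 600    # condition must last 10 minutes before firing
    firing: bool = False
    _high_since: Optional[float] = None

    def update(self, timestamp: float, value: float) -> bool:
        """Feed one sample (Unix-style timestamp, percent) and return alert state."""
        if value >= self.threshold:
            if self._high_since is None:
                self._high_since = timestamp
            if timestamp - self._high_since >= self.hold_seconds:
                self.firing = True
        elif value < self.clear_below:
            # Only a clear drop resets the timer and the alert.
            self._high_since = None
            self.firing = False
        # Values between clear_below and threshold keep the current state,
        # so short dips do not make the alert flap.
        return self.firing


alert = SustainedAlert()
for ts, ram_pct in [(0, 85), (300, 90), (600, 85), (660, 75), (720, 60)]:
    print(ts, ram_pct, alert.update(ts, ram_pct))
```

The band between the two bounds is the design choice here: a brief dip to 75% neither clears a firing alert nor restarts the timer, which is one simple way to avoid alert noise.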

WordPress, plugins and databases: What really eats up RAM?

WordPress benefits from RAM primarily through object cache, image processing, backups and plugin diversity. Each plugin loads code and data, increases the PHP memory budget and can maintain transients or caches. Media workflows require additional memory when multiple sizes are generated or WebP formats are built. Databases need buffers for indexes and queries; if the number of simultaneous users increases, these buffers grow with them. That's why I keep headroom, optimize query plans, minimize plugin overhead and use OPcache and object caching in a targeted manner so that memory load remains predictable.

Correctly dimension OPcache, page cache and object cache

OPcache reduces CPU and I/O load, but needs a few hundred MB for large code bases. I make sure memory_consumption and the interned strings buffer are large enough so that no recompilation is forced. The page cache shifts load from CPU/DB to RAM/storage - ideal for recurring page views. TTLs that are too short waste cache hits, TTLs that are too long serve stale content; I balance them against how often content changes. The object cache (e.g. persistent in RAM) massively reduces database hits, but requires clearly defined sizes and an eviction strategy. If the hit rate drops as RAM usage increases, I allocate more memory or slim down cache keys so that hot data remains in memory.
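To make "defined size plus eviction strategy" concrete, here is a minimal Python sketch of a size-bounded cache with least-recently-used eviction and a hit-rate counter. It is a toy model, not how any particular object cache is implemented: real caches usually bound memory in bytes rather than entries, and the class name `LRUCache` is an assumption.

```python
from collections import OrderedDict
from typing import Any, Hashable


class LRUCache:
    """Entry-bounded cache that evicts the least recently used key."""

    def __init__(self, max_entries: int) -> None:
        if max_entries < 1:
            raise ValueError("max_entries must be at least 1")
        self.max_entries = max_entries
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable, default: Any = None) -> Any:
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._data[key]
        self.misses += 1
        return default

    def set(self, key: Hashable, value: Any) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # drop the oldest entry

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = LRUCache(max_entries=2)
cache.set("post:1", "rendered A")
cache.set("post:2", "rendered B")
cache.get("post:1")                # hit, keeps post:1 hot
cache.set("post:3", "rendered C")  # evicts post:2, the coldest key
print(cache.get("post:2"), cache.hit_rate())
```

When the working set is larger than the cache, hot keys start evicting each other and the hit rate falls, which is the signal described above for allocating more memory or trimming keys.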

Practical guide: How to calculate RAM realistically

Procedure instead of guesswork: I check the current peak load, i.e. requests per second, concurrent users and the heaviest processes over the course of the day. I then determine the typical RAM consumption per PHP worker and per cron/import job and add safety margins for peaks. For uploads, I take the file size and number of images into account, as thumbnails and conversions tie up memory. For WordPress, I use at least 1 GB, for WooCommerce and sites with many extensions often 2-4 GB, and significantly more for high traffic. An upgrade option remains important so that I can scale up as required without downtime.

Sample calculation: from RAM to the number of PHP workers

Assumption: 2 GB RAM in total. I reserve a conservative 700-800 MB for the operating system, web server, OPcache, object cache and file cache. This leaves ~1.2 GB available for PHP workers and peaks. Measurement shows 120 MB per request on average, with individual peaks up to 180 MB.

- Baseline: 1.2 GB / 180 MB ≈ 6 workers in the worst case.

- Real operation: 1.2 GB / 120 MB ≈ 10 workers; I set 8-9 to leave room for peaks and background jobs.

- I set pm.max_requests to 300-500 to smooth out leaks and fragmentation.

If the load increases, I first increase RAM (more buffer, higher number of workers), then CPU cores (more parallel processing) and finally I/O capacity if I/O wait increases. For imports or image jobs, I throttle parallelism so that frontend users do not suffer.
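The sample calculation can be reproduced with a short Python sketch. The inputs (2 GB total, about 800 MB reserved, 120 MB average, 180 MB peak) come from the example above; the function name `plan_php_workers` and the 15% headroom factor are assumptions chosen so that the result lands in the 8-9 range mentioned there.

```python
def plan_php_workers(
    total_mb: int,
    reserved_mb: int,
    avg_request_mb: int,
    peak_request_mb: int,
    headroom: float = 0.15,  # assumed safety margin for peaks and background jobs
) -> dict[str, int]:
    """Estimate PHP-FPM worker counts from a RAM budget and measured usage."""
    budget_mb = total_mb - reserved_mb
    if budget_mb <= 0:
        raise ValueError("reserved memory exceeds total memory")
    if avg_request_mb <= 0 or peak_request_mb < avg_request_mb:
        raise ValueError("expected 0 < avg_request_mb <= peak_request_mb")

    worst_case = budget_mb // peak_request_mb   # every worker at its peak
    average_case = budget_mb // avg_request_mb  # every worker at the average
    # Stay below the average-case count, but never below the worst-case count.
    suggested = max(worst_case, int(average_case * (1 - headroom)))
    suggested = min(suggested, average_case)

    return {
        "budget_mb": budget_mb,
        "worst_case": worst_case,
        "average_case": average_case,
        "suggested": suggested,
    }


plan = plan_php_workers(
    total_mb=2048, reserved_mb=800, avg_request_mb=120, peak_request_mb=180
)
print(plan)
```

The suggested value would be a starting point for pm.max_children, to be checked against real measurements rather than taken as fixed.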

I/O speed: SSD vs. NVMe in hosting

I/O determines how well RAM caches work, how fast databases deliver and how quickly backups run. NVMe drives offer significantly lower latencies than classic SSDs and therefore reduce the load on memory and CPU because requests spend less time waiting on storage. Anyone who moves a lot of small files, logs or sessions will notice this immediately in the backend and when loading pages. I check provider profiles for NVMe storage and sensible I/O limits so that the stack is not throttled in the wrong place. I go into more detail about media and latencies in the comparison SSD vs. NVMe, because storage technology significantly influences throughput.

Swap, OOM killer and safe buffers

Swap is not a performance feature, but an airbag. A small swap area can buffer short peaks and keep the OOM killer, which abruptly terminates processes, at bay. However, permanent swapping means massive I/O load and increasing latencies. The damage is smaller on NVMe than on slower SSDs, but remains noticeable. I keep swappiness moderate, plan sufficient RAM buffers and monitor swap usage; if swapping occurs regularly, I scale up or spread jobs out. In shared or container environments, cgroup limits apply - overruns lead to OOM events more quickly there, which is why conservative worker numbers and hard limits are particularly important.

Scaling instead of oversizing: Upgrade strategies

Scaling saves costs and keeps performance predictable. I start with a conservative RAM size, define clear threshold values (e.g. 80% utilization over 10 minutes) and plan an upgrade once they are exceeded. At the same time, I optimize cache TTLs, reduce unnecessary cron intervals and relieve the database via indexes and query caching. If traffic grows unexpectedly, I first increase RAM for buffers, then CPU cores for throughput and finally I/O capacity if waiting times increase. Keeping an eye on this sequence avoids bad investments and improves response time under load.

Scaling variants: Shared, VPS, Dedicated, Cluster

Shared hosting offers convenience, but hard limits on RAM, CPU and I/O; good for small to medium-sized projects with solid caching. A VPS gives more control over RAM allocation, PHP-FPM, OPcache and caches - ideal if I want to fine-tune workers and services. Dedicated servers provide maximum reserves and constant I/O, but are only worthwhile for permanently high loads or special requirements. A cluster scales horizontally, but requires stateless design: moving sessions from local RAM to a central store, synchronizing media and invalidating caches. For WordPress/shop stacks, I plan object cache and sessions outside the web server so that additional nodes do not fail due to RAM-bound state.

Performance checks: key figures that I check regularly

Metrics make bottlenecks visible and show where upgrades really help. I monitor memory usage, page cache and object cache hit rate, I/O wait, CPU load (1/5/15) and median and P95 response times. A falling cache hit rate with increasing RAM utilization suggests that more memory should be allocated to caches. High I/O wait with free CPU reserves indicates storage bottlenecks that NVMe or better limits can solve. If PHP workers are permanently utilized, I increase CPU cores or reduce expensive requests so that processing times drop.
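These rules of thumb can be written down as a small Python sketch. The three rules mirror the paragraph above; all numeric thresholds, field names and the `diagnose` function itself are assumptions for illustration and would need tuning per stack.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Two consecutive readings of a few key figures (all in percent)."""

    ram_used_pct: float
    prev_ram_used_pct: float
    cache_hit_rate: float
    prev_cache_hit_rate: float
    iowait_pct: float
    cpu_used_pct: float
    worker_busy_pct: float


def diagnose(
    s: Snapshot,
    *,
    iowait_high: float = 10.0,     # assumed: I/O wait considered high
    cpu_free_below: float = 60.0,  # assumed: CPU still has reserves below this
    workers_high: float = 90.0,    # assumed: workers count as saturated
) -> list[str]:
    """Return upgrade hints based on the rules of thumb from the text."""
    hints: list[str] = []
    if s.cache_hit_rate < s.prev_cache_hit_rate and s.ram_used_pct > s.prev_ram_used_pct:
        hints.append("allocate more memory to caches")
    if s.iowait_pct >= iowait_high and s.cpu_used_pct < cpu_free_below:
        hints.append("storage bottleneck: check NVMe or I/O limits")
    if s.worker_busy_pct >= workers_high:
        hints.append("add CPU cores or reduce expensive requests")
    return hints


snapshot = Snapshot(
    ram_used_pct=88, prev_ram_used_pct=80,
    cache_hit_rate=82, prev_cache_hit_rate=91,
    iowait_pct=14, cpu_used_pct=35,
    worker_busy_pct=60,
)
print(diagnose(snapshot))
```

In practice I would evaluate trends over longer windows rather than two readings; the two-point comparison only keeps the sketch short.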

Alerting and traces: setting thresholds sensibly

I plan notifications carefully: RAM > 85% and I/O wait above a defined threshold only trigger an alert if the condition persists. I track P95/P99 instead of just the median so that outliers become visible. For the database, I use slow query analyses and connection peaks; in PHP, I monitor the biggest memory offenders and limit their lifetime via pm.max_requests. In maintenance windows, I compare traces before and after changes to separate real improvements from measurement noise. In this way, I prevent blind RAM upgrades when the real issue is caching, indexes or I/O limits.
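Why P95/P99 reveal what the median hides can be shown with a small Python sketch. It uses the nearest-rank percentile definition, which is one of several common definitions; the response times are made-up sample values.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical response times in milliseconds: 18 fast requests, 2 slow ones.
response_ms = [100] * 18 + [900, 2500]

print("median:", percentile(response_ms, 50))
print("P95:", percentile(response_ms, 95))
print("P99:", percentile(response_ms, 99))
```

With this sample, the median stays at 100 ms while P95 and P99 land on the two slow requests, which is exactly the kind of outlier an average or median would mask.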

Provider selection: What I look for in RAM offers

Choosing a provider goes faster if I set clear criteria: RAM scaling in small steps, fair I/O limits, current CPU generations and NVMe storage. A good plan allows flexible upgrades, provides transparent metrics and offers sufficient PHP workers. For production CMS and store stacks, I prefer options starting at 2-4 GB RAM with room to grow, depending on peak behavior. In many comparisons, webhoster.de stands out positively because RAM options, CPU equipment and NVMe storage come together to form a coherent overall package. This is how I secure performance for growing projects without time-consuming migrations.

Briefly summarized: My recommendation

I set my priorities like this: first measure bottlenecks, then balance RAM, CPU and I/O in a targeted manner. I plan at least 1 GB for WordPress, 2-4 GB for larger stores or communities and significantly more for real peaks, always with an upgrade option. CPU performance and NVMe storage increase the benefits of RAM because calculations run faster and data arrives more quickly. I consistently keep an eye on monitoring, cache strategy and plugin hygiene before I upgrade hardware. With this approach, I achieve reliable performance, keep costs under control and remain scalable at all times.